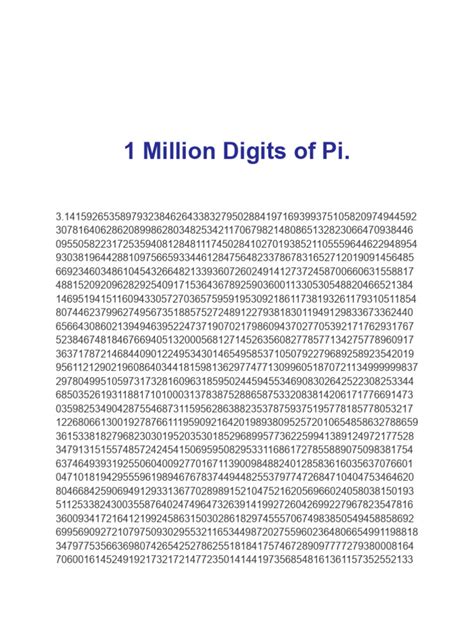

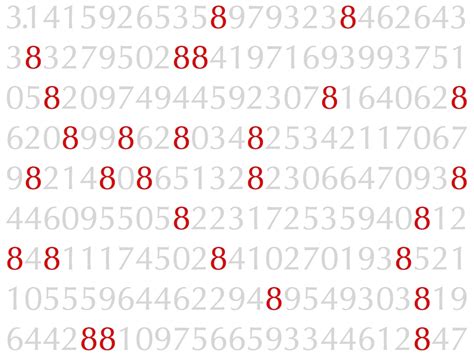

The pursuit of calculating pi (π) to an ever-increasing number of digits has long fascinated mathematicians and computer scientists. This irrational number, the ratio of a circle's circumference to its diameter, has been studied for thousands of years. The ancient Greek mathematician Archimedes is credited with the first rigorous calculation of pi: by comparing polygons inscribed in and circumscribed about a circle, he showed that its value lies between 3 10/71 and 3 1/7. Since then, the calculation of pi has become a benchmark for computational power and mathematical ingenuity. One milestone on that path is the calculation of pi to 1 billion digits, a mark first passed in 1989.
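Archimedes' bounds can be reproduced in a few lines. The sketch below is an illustration rather than his actual procedure: it assumes the classical polygon-doubling recurrence (a harmonic mean followed by a geometric mean) and uses floating-point square roots where Archimedes worked with rational approximations derived by hand. The function name `archimedes_bounds` is hypothetical.

```python
import math


def archimedes_bounds(doublings=4):
    """Bound pi using polygons around a circle of diameter 1.

    Starts from hexagons and doubles the number of sides `doublings`
    times; four doublings give the 96-sided polygons Archimedes used.
    Returns (lower, upper) perimeters, which bracket pi.
    """
    upper = 2 * math.sqrt(3)  # perimeter of the circumscribed hexagon
    lower = 3.0               # perimeter of the inscribed hexagon
    for _ in range(doublings):
        # Circumscribed 2n-gon: harmonic mean of the two n-gon perimeters.
        upper = 2 * upper * lower / (upper + lower)
        # Inscribed 2n-gon: geometric mean of the new upper and old lower.
        lower = math.sqrt(upper * lower)
    return lower, upper


lower, upper = archimedes_bounds()
# For 96 sides: lower is about 3.14103 and upper is about 3.14271.
print(f"{lower:.5f} < pi < {upper:.5f}")
```

Both values fall inside Archimedes' published interval, which he loosened slightly to the simple fractions 3 10/71 and 3 1/7.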

The History of Pi Calculation

Over the centuries, mathematicians have employed various techniques to calculate pi, from geometric methods to infinite series. Around the turn of the 17th century, the German-born Dutch mathematician Ludolph van Ceulen calculated pi to 35 digits using polygon methods. Infinite series then took over and extended hand calculations to hundreds of digits. The development of computers in the 20th century revolutionized pi calculation, eventually enabling mathematicians to compute millions of digits. In 1949, John von Neumann and his team used the ENIAC computer to calculate pi to 2,037 digits. This marked the beginning of a new era in pi calculation, with each subsequent record pushing the boundaries of computational power and mathematical techniques.

Modern Pi Calculation Techniques

Today, mathematicians and computer scientists use advanced algorithms and massive computational resources to calculate pi. The Chudnovsky algorithm, developed in the 1980s by brothers David and Gregory Chudnovsky, is a popular method for high-precision calculation. It is a rapidly converging series in which each additional term contributes roughly 14 more correct decimal digits, and combined with fast large-number arithmetic it scales to billions of digits and beyond. Another approach is the Monte Carlo method, which uses random sampling to estimate pi. It works well as a teaching example but not for high precision: its error shrinks only in proportion to the inverse square root of the number of samples, so in practice it yields a handful of correct digits rather than millions.
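To make the Chudnovsky series concrete, here is a minimal sketch using Python's standard `decimal` module. It sums the series term by term, which is fine for a few thousand digits; record-scale computations use considerably more elaborate summation and multiplication techniques that this example does not attempt. The function name `chudnovsky_pi` and the choice of 10 guard digits are assumptions made for illustration.

```python
from decimal import Decimal, localcontext


def chudnovsky_pi(digits):
    """Return pi as a string with `digits` decimal places (truncated)."""
    with localcontext() as ctx:
        ctx.prec = digits + 10  # guard digits to absorb rounding error
        c = 426880 * Decimal(10005).sqrt()
        m = 1            # (6k)! / ((3k)! * (k!)^3), kept as an exact integer
        l = 13591409     # linear term: 13591409 + 545140134 * k
        x = 1            # (-640320^3)^k
        k6 = 6           # helper: 12k - 6 for the next k, used to update m
        total = Decimal(l)
        # Each term adds about 14 digits, so digits // 14 + 2 terms suffice.
        for k in range(1, digits // 14 + 2):
            m = m * (k6 ** 3 - 16 * k6) // k ** 3  # division is exact
            l += 545140134
            x *= -262537412640768000
            total += Decimal(m * l) / x
            k6 += 12
        pi = c / total
    return str(pi)[: digits + 2]  # "3." plus the requested digits


print(chudnovsky_pi(50))
```

Five terms of the series are enough for the 50 digits printed here, which illustrates why the method scales so much better than sampling-based estimates.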

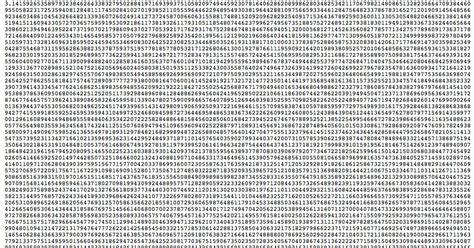

| Year | Number of Digits | Computer or Platform |
|---|---|---|
| 1949 | 2,037 | ENIAC computer |
| 1961 | 100,265 | IBM 7090 computer |
| 1983 | 16,777,206 | HITAC M-280H computer |
| 1999 | 206,158,430,000 | Hitachi SR8000 supercomputer |
| 2019 | 31,415,926,535,897 | Google Cloud computing platform |

Key Points

- The calculation of pi to 1 billion digits is a milestone achieved through advanced computational techniques and mathematical ingenuity.

- The calculation of pi has a long history, dating back to ancient Greek mathematicians, and has become a benchmark for computational power and mathematical accuracy.

- Modern high-precision calculations rely on rapidly converging series such as the Chudnovsky algorithm, which scale to billions of digits. The Monte Carlo method illustrates a simpler sampling approach but yields only a few correct digits.

- The pursuit of calculating pi continues to drive innovation in computational mathematics and computer science, particularly in fast arithmetic on very large numbers.

- The calculation of pi to billions of digits raises questions about the practical applications of such calculations, but it also demonstrates the power of human ingenuity and the importance of pushing the boundaries of mathematical knowledge.

Implications and Future Directions

The calculation of pi to 1 billion digits matters less for the digits themselves than for what it takes to produce them. Practical work in physics and engineering needs only a few dozen digits of pi at most, so the value of such calculations lies elsewhere. They stress-test hardware and software, drive the development of fast algorithms for arithmetic on very large numbers, and supply data for studying open questions about pi itself, such as whether its digits are statistically random (the conjecture that pi is a normal number).

Technical Specifications and Challenges

The calculation of pi to 1 billion digits requires an efficient algorithm and fast arbitrary-precision arithmetic. The Chudnovsky algorithm gains roughly 14 digits per term, so a billion digits takes on the order of 70 million terms. The dominant cost is not the number of terms but the multiplication and division of numbers that are themselves hundreds of millions of digits long, which is why practical implementations depend on fast multiplication algorithms. The Monte Carlo method, on the other hand, needs only modest resources per sample but cannot reach high precision: each additional correct digit requires roughly 100 times more samples.
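The limits of the Monte Carlo method are easy to see in a short experiment. The sketch below uses the common quarter-circle formulation: sample points uniformly in the unit square and count how many land inside the quarter circle of radius 1, a fraction that approaches π/4. The function name `monte_carlo_pi` and the fixed seed are illustrative assumptions.

```python
import math
import random


def monte_carlo_pi(samples, seed=0):
    """Estimate pi from `samples` random points in the unit square."""
    rng = random.Random(seed)  # seeded so repeated runs give the same result
    inside = 0
    for _ in range(samples):
        x, y = rng.random(), rng.random()
        if x * x + y * y <= 1.0:  # point falls inside the quarter circle
            inside += 1
    return 4 * inside / samples


# The error shrinks roughly like 1 / sqrt(samples).
for n in (1_000, 100_000, 1_000_000):
    estimate = monte_carlo_pi(n)
    print(f"{n:>9} samples: {estimate:.6f} (error {abs(estimate - math.pi):.6f})")
```

Because the statistical error falls only with the square root of the sample count, even a trillion samples would be expected to give about six correct decimal places, far short of what a few terms of a fast series provide.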

The calculation of pi to 1 billion digits also raises technical challenges in data storage, computational accuracy, and algorithmic efficiency. Stored as plain text at one byte per digit, a billion digits occupy about 1 GB, and the working memory needed for intermediate values is larger still. Because a single hardware or software fault can silently corrupt every later digit, results must be verified independently, for example by repeating the computation with a different algorithm or by checking digits at selected positions with a digit-extraction formula.
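The text does not name a specific validation technique, so the following is one possible illustration rather than a description of any particular record run. The Bailey-Borwein-Plouffe (BBP) formula can produce a hexadecimal digit of pi at a chosen position without computing the digits before it, which makes it a cheap independent spot check on a large result. The function names `bbp_partial_sum` and `pi_hex_digit` are hypothetical, and the double-precision arithmetic limits this sketch to moderate positions.

```python
def bbp_partial_sum(j, n):
    """Fractional part of the sum over k of 16^(n-k) / (8k + j)."""
    total = 0.0
    # Terms with k <= n: modular exponentiation keeps the numbers small.
    for k in range(n + 1):
        denom = 8 * k + j
        total = (total + pow(16, n - k, denom) / denom) % 1.0
    # Terms with k > n shrink by a factor of 16 each step.
    k = n + 1
    while True:
        term = 16.0 ** (n - k) / (8 * k + j)
        if term < 1e-17:
            break
        total += term
        k += 1
    return total % 1.0


def pi_hex_digit(n):
    """Hex digit of pi at offset n after the point (n = 0 is the first)."""
    frac = (4 * bbp_partial_sum(1, n)
            - 2 * bbp_partial_sum(4, n)
            - bbp_partial_sum(5, n)
            - bbp_partial_sum(6, n)) % 1.0
    return "0123456789abcdef"[int(frac * 16)]


# Pi in hexadecimal begins 3.243f6a88...
print("".join(pi_hex_digit(n) for n in range(8)))
```

A check of this kind compares a few independently computed hexadecimal digits near the end of the result with the main computation's output; a match gives strong evidence that no error crept in earlier.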

What is the significance of calculating pi to 1 billion digits?

The calculation of pi to 1 billion digits demonstrates the power of modern computational techniques. Its value lies less in the digits themselves than in the hardware stress-testing and the fast large-number algorithms that such computations require and motivate.

How is pi calculated to 1 billion digits?

Pi is calculated to 1 billion digits using a rapidly converging series, such as the Chudnovsky algorithm, together with fast arbitrary-precision arithmetic. Record-setting runs use supercomputers or cloud computing platforms, and the result is verified with an independent calculation.

What are the practical applications of calculating pi to 1 billion digits?

Direct practical applications are limited, since scientific and engineering calculations need only a few dozen digits of pi at most. The benefits are indirect: testing the reliability of hardware, developing new algorithms for computational mathematics, and providing data for studying the statistical properties of pi's digits.

In conclusion, the calculation of pi to 1 billion digits is a significant achievement that demonstrates the power of modern computational techniques and the ingenuity of mathematicians and computer scientists. While the digits themselves have few direct applications, the effort tests hardware, advances algorithms for computational mathematics, and informs the study of pi's digits. As computational power and mathematical techniques continue to advance, we can expect even larger calculations of pi in the future.