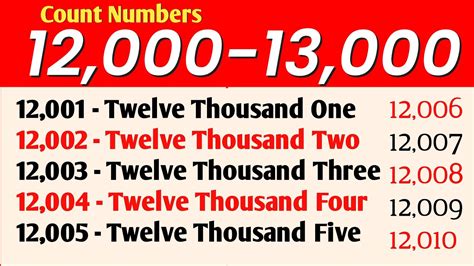

In an era dominated by big data and sweeping statistics, it is easy to dismiss small percentages as trivial or negligible. Yet for professionals in finance, healthcare, marketing, and public policy, these small figures often conceal profound insights and transformative potential. The phrase "20 of 13,000" (about 0.15%) is a good example. The fraction looks insignificant, but rigorous analytical tools can show that it carries meaningful patterns, rare occurrences, and subtle shifts that shape outcomes in unexpected ways. Understanding the significance of small percentages requires attention to statistical precision, contextual relevance, and domain-specific implications, all of which are vital for informed decision-making and strategic foresight.

Unveiling the Power of Small Percentages in Data-Driven Environments

Across disciplines, small percentages serve as crucial indicators, especially in high-stakes contexts where slight variations can mean the difference between success and failure. In healthcare, for example, a medication that improves symptoms in just 20 out of every 13,000 patients might be a breakthrough for a rare condition. It might also be a negligible effect that cannot be distinguished from statistical noise. In finance, a change of about 0.15% in interest rates or asset returns can compound over time into significant gains or losses. That is roughly 15 basis points, the same proportion as 20 out of 13,000. These figures underscore a core principle: small percentages, when properly interpreted, can disproportionately influence larger systems, revealing opportunities for targeted intervention or flagging risks that call for caution.
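
The compounding claim can be made concrete with a short calculation. The Python sketch below assumes annual compounding, a hypothetical 5% baseline return, 1,000,000 units of starting capital, and a 30-year horizon; none of these figures come from the text. It compares the baseline with a return that is higher by 20/13,000, or about 0.154 percentage points.

```python
# Compare two hypothetical investments whose annual returns differ by
# 20/13,000 (about 0.154 percentage points, i.e. roughly 15 basis points).

def future_value(principal: float, annual_rate: float, years: int) -> float:
    """Value of `principal` compounded once per year at `annual_rate`."""
    return principal * (1 + annual_rate) ** years

principal = 1_000_000      # hypothetical starting capital
base_rate = 0.05           # hypothetical 5% baseline annual return
bump = 20 / 13_000         # the small extra return, about 0.00154
years = 30                 # hypothetical horizon

base = future_value(principal, base_rate, years)
bumped = future_value(principal, base_rate + bump, years)
gap = bumped - base

print(f"Baseline after {years} years: {base:,.0f}")
print(f"With +{bump:.4%} per year: {bumped:,.0f}")
print(f"Difference: {gap:,.0f} ({gap / base:.2%} of baseline)")
```

Under these assumptions, the higher-return portfolio ends up roughly 4.5% larger. The exponent drives that gap, so a longer horizon widens it and a larger capital pool makes the absolute difference bigger.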

Interpreting Minuscule Data: Context Matters More Than Numbers

Context becomes paramount when evaluating tiny fractions like 20 out of 13,000. Consider a clinical trial with 13,000 participants in which only 20 respond favorably to a new therapy. At first glance, the response rate of approximately 0.154% appears marginal. However, if the condition is life-threatening or rare, even such a small subset can be clinically significant. With only 20 events, the estimate is also uncertain. Its confidence interval is wide relative to the point estimate, so the true rate could plausibly be noticeably lower or higher than it appears. Techniques such as Bayesian inference or bootstrapping help quantify that uncertainty and assess the true impact. In this framework, the raw percentage is no longer a mere number. It becomes a contextual signal that guides therapeutic decisions, resource allocation, and research priorities.
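
A confidence interval is one way to quantify the uncertainty around 20 successes in 13,000 trials. The sketch below uses the Wilson score interval, a standard interval for binomial proportions that behaves better than the simple normal approximation when the rate is close to zero. The text does not prescribe this method; it is a swapped-in illustration that uses only the Python standard library.

```python
import math
from statistics import NormalDist

def wilson_interval(successes: int, n: int, confidence: float = 0.95) -> tuple[float, float]:
    """Wilson score interval for a binomial proportion successes / n."""
    z = NormalDist().inv_cdf(0.5 + confidence / 2)   # about 1.96 for 95%
    p_hat = successes / n
    denom = 1 + z**2 / n
    centre = (p_hat + z**2 / (2 * n)) / denom
    half_width = (z / denom) * math.sqrt(p_hat * (1 - p_hat) / n + z**2 / (4 * n**2))
    return centre - half_width, centre + half_width

low, high = wilson_interval(20, 13_000)
print(f"Point estimate: {20 / 13_000:.3%}")
print(f"95% Wilson interval: {low:.3%} to {high:.3%}")
```

For 20 of 13,000, this gives an interval of roughly 0.10% to 0.24%. The true rate could therefore plausibly be about a third lower, or about half again higher, than the 0.154% point estimate.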

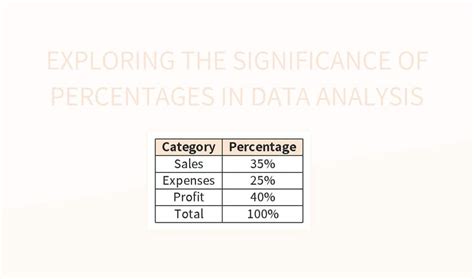

| Context | Figure and Significance |
|---|---|
| Rare disease response rate | 20 out of 13,000 equates to 0.154%, potentially pivotal in niche treatment contexts |
| Financial impact per basis point | A 0.01% (one basis point) change can compound significantly over large capital pools |
| Efficiency improvements in manufacturing | A 0.2% defect reduction yields notable savings in high-volume production |

The Role of Precision and Measurement in Small Percentages

Small percentages are often overlooked because measurement precision is limited or because statistical noise can overshadow true effects. In scientific research, estimating tiny fractions accurately requires sensitive instruments and robust experimental designs. Reliably detecting a response rate of 20 in 13,000, for instance, requires a sample large enough to keep two error rates acceptably low: type I errors (false positives) and type II errors (missed effects). This is why statistical power calculations and confidence intervals matter. Machine learning models and AI-based analytics can help separate signal from noise, although they need careful validation when data points are scarce. The goal is to avoid false positives that lead to misguided policy or product decisions while still recognizing genuine patterns that deserve attention.
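
A power calculation shows how large a sample must be before a rate this small can be distinguished from a background rate. This sketch uses the common normal-approximation formula for a two-sided, one-sample test of a proportion. The 0.1% baseline, 5% significance level, and 80% power are hypothetical choices, not figures from the text.

```python
import math
from statistics import NormalDist

def one_sample_size(p0: float, p1: float, alpha: float = 0.05, power: float = 0.80) -> int:
    """Approximate n to detect a true rate p1 against H0: p = p0
    (two-sided test, normal approximation)."""
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)   # controls type I error
    z_beta = NormalDist().inv_cdf(power)            # controls type II error
    numerator = z_alpha * math.sqrt(p0 * (1 - p0)) + z_beta * math.sqrt(p1 * (1 - p1))
    return math.ceil((numerator / (p1 - p0)) ** 2)

baseline = 0.001            # hypothetical background rate of 0.1%
observed = 20 / 13_000      # about 0.154%
n_needed = one_sample_size(baseline, observed)
print(f"Participants needed: {n_needed:,}")
```

With these assumptions, the formula calls for roughly 31,000 participants, more than twice the 13,000 in the example trial. The normal approximation is rough for rates this close to zero, so an exact binomial calculation would be a sensible cross-check.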

Advanced Analytical Techniques for Small Effects

Tools such as hierarchical modeling, Bayesian updating, and ensemble methods help analysts interpret minuscule percentages more reliably. Bayesian methods, for example, combine prior knowledge with observed data through probabilistic reasoning, which often reduces the uncertainty associated with small samples. In finance, Monte Carlo simulations project the outcomes of marginal percentage changes across many simulated scenarios, exposing how their effects compound over time. In healthcare, meta-analyses aggregate small response rates across studies, increasing statistical power and the credibility of observed effects. These techniques turn marginal figures into actionable intelligence rather than dismissible anomalies.
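
The conjugate Beta-binomial model offers a simple illustration of Bayesian updating. The sketch assumes a hypothetical Beta(1, 999) prior, which encodes a prior belief of about 0.1% backed by roughly 1,000 pseudo-observations, and updates it with the 20 responses out of 13,000. Python's standard library has no Beta quantile function, so the sketch approximates the credible interval by sampling with `random.betavariate`.

```python
import random

# Hypothetical prior: Beta(1, 999), a prior mean of 0.1% worth about 1,000 pseudo-observations.
prior_a, prior_b = 1.0, 999.0
successes, n = 20, 13_000

# Conjugate Beta-binomial update: successes add to a, failures add to b.
post_a = prior_a + successes
post_b = prior_b + (n - successes)
post_mean = post_a / (post_a + post_b)

# Approximate a 95% equal-tailed credible interval from posterior samples.
rng = random.Random(42)  # fixed seed for reproducibility
n_draws = 20_000
draws = sorted(rng.betavariate(post_a, post_b) for _ in range(n_draws))
lower = draws[int(0.025 * n_draws)]
upper = draws[int(0.975 * n_draws) - 1]

print(f"Raw estimate:   {successes / n:.3%}")
print(f"Posterior mean: {post_mean:.3%}")
print(f"95% credible interval (approx.): {lower:.3%} to {upper:.3%}")
```

The posterior mean works out to exactly 21/14,000 = 0.15%. That is slightly below the raw 0.154% because the prior pulls the estimate toward 0.1%. A weaker prior, with a smaller pseudo-count, would pull less.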

| Analytical Technique | Application Example |
|---|---|
| Bayesian inference | Refining rare event probability estimates with prior data |
| Monte Carlo simulations | Projecting cumulative impact of small interest rate changes |
| Meta-analysis | Aggregating tiny response rates across multiple trials for stronger evidence |
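
A Monte Carlo simulation adds market randomness to the idea of compounding a small rate change. The sketch below compares a baseline with a mean return 15 basis points higher. It assumes normally distributed annual return shocks with a hypothetical 12% volatility, a 5% mean return, a 20-year horizon, and 5,000 simulated paths. Both scenarios reuse the same random shocks (common random numbers), so any difference between them comes from the small rate change alone.

```python
import random
import statistics

def terminal_wealth(mean_return: float, shock_paths: list[list[float]]) -> list[float]:
    """Compound 1.0 unit of capital along each path of yearly return shocks."""
    results = []
    for path in shock_paths:
        wealth = 1.0
        for shock in path:
            # Floor the growth factor at zero: a portfolio cannot lose more than everything.
            wealth *= max(0.0, 1 + mean_return + shock)
        results.append(wealth)
    return results

rng = random.Random(7)                         # fixed seed for reproducibility
n_paths, years, volatility = 5_000, 20, 0.12   # hypothetical parameters
# Common random numbers: both scenarios see identical market shocks.
shock_paths = [[rng.gauss(0.0, volatility) for _ in range(years)] for _ in range(n_paths)]

baseline_paths = terminal_wealth(0.05, shock_paths)
shifted_paths = terminal_wealth(0.05 + 0.0015, shock_paths)   # +15 basis points

base_median = statistics.median(baseline_paths)
shifted_median = statistics.median(shifted_paths)
print(f"Median terminal wealth, baseline: {base_median:.3f}")
print(f"Median terminal wealth, +15 bp:   {shifted_median:.3f}")
print(f"Relative uplift in the median:    {shifted_median / base_median - 1:.2%}")
```

Reusing the shocks is a deliberate design choice. With independent draws, sampling noise could swamp a 15-basis-point effect unless the number of paths were very large.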

Implications for Strategy and Decision-Making

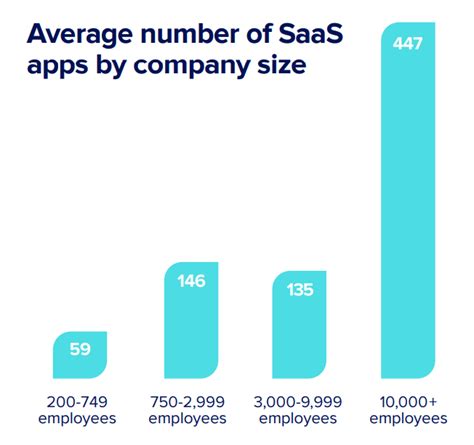

Decision-makers must recognize that small percentages, despite their seemingly marginal nature, can serve as strategic signals. In quality control, for instance, a defect rate of 0.2% (20 defects per 10,000 units) might be acceptable on paper. A rate that persists, however, accumulates across high volumes and repeat purchases and can gradually undermine brand reputation and customer loyalty. Conversely, in targeted marketing, reaching 20 individuals out of 13,000 can point to a niche segment ripe for specialized campaigns, which could yield disproportionate returns given the low cost of outreach. Understanding the structural significance of tiny figures therefore enables more nuanced prioritization and resource deployment, especially where precision is vital.
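
One way to see the cumulative effect of a persistent 0.2% defect rate is to ask how likely a customer is to receive at least one defective unit in an order. This minimal sketch assumes that defects occur independently, an assumption that real production lines may violate.

```python
def prob_at_least_one_defect(defect_rate: float, units: int) -> float:
    """P(at least one defective unit among `units`), assuming independent defects."""
    return 1 - (1 - defect_rate) ** units

rate = 20 / 10_000   # the 0.2% defect rate from the text
for order_size in (1, 10, 100, 1_000):
    p = prob_at_least_one_defect(rate, order_size)
    print(f"Order of {order_size:>5,} units: {p:.1%} chance of at least one defect")
```

Under that assumption, an order of 100 units has roughly an 18% chance of containing at least one defect, and an order of 1,000 units has a chance above 85%. This is how a "small" rate can still reach many customers.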

Balancing Cost-Benefit with Small Percentage Insights

The challenge lies in discerning when investing resources to interpret or act on small percentages is warranted. Is the cost of further investigation justified by the potential gains? Does the domain context amplify or diminish the impact? In defect detection systems, for example, reducing the defect rate from 0.2% to 0.1% may require substantial technological upgrades. The baseline rate is already low, so halving it removes only one defect per 1,000 units. The improvement may therefore not be cost-effective unless volumes are high or each defect is expensive. Conversely, in risk-sensitive environments like aviation safety, even a 0.02% failure rate (one failure in 5,000 operations) can translate into catastrophic outcomes, demanding relentless focus on tiny figures. Balancing these considerations requires recognizing that small percentages are not inherently insignificant. Their importance depends on context.
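
The cost-benefit question can be framed as a simple payback calculation. Every input below is a hypothetical placeholder chosen for illustration: annual volume, cost per defect, upgrade cost, and the number of aviation-style operations. The point is the structure of the comparison, not the specific numbers.

```python
def annual_savings(volume: int, old_rate: float, new_rate: float,
                   cost_per_defect: float) -> float:
    """Expected yearly savings from lowering the defect rate (linear cost model)."""
    return volume * (old_rate - new_rate) * cost_per_defect

# Hypothetical manufacturing scenario: halve the defect rate from 0.2% to 0.1%.
volume = 2_000_000         # units produced per year (hypothetical)
cost_per_defect = 40.0     # cost to handle one defective unit (hypothetical)
upgrade_cost = 150_000.0   # one-off cost of the upgrade (hypothetical)

savings = annual_savings(volume, 0.002, 0.001, cost_per_defect)
payback_years = upgrade_cost / savings
print(f"Annual savings: {savings:,.0f}; payback in {payback_years:.1f} years")

# Hypothetical safety-critical scenario: a 0.02% failure rate (1 in 5,000).
operations = 50_000        # operations per year (hypothetical)
expected_failures = 0.0002 * operations
print(f"Expected failures per year: {expected_failures:.0f}")
```

With these placeholders, the upgrade pays for itself in just under two years. At one-tenth of the volume, the payback would take nearly 19 years. In the safety-critical case, the same arithmetic shows that a 0.02% rate still implies about 10 failures a year, which is unacceptable when each one may be catastrophic.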

Key Points

- Small percentages can conceal significant systemic effects, especially in high-reliability fields.

- Domain expertise combined with advanced statistical methods enhances interpretive accuracy.

- Contextualization transforms trivial figures into critical signals, informing strategic action.

- Cost-benefit analysis is essential to determine when small percentages merit attention.

- Technological innovations amplify our capability to detect and utilize minor data patterns effectively.

Historical Significance and Future Trends

The importance of small percentages is not merely a contemporary phenomenon; it has deep roots in the history of scientific inquiry and technological progress. Breakthroughs have often come from careful observation of rare events or subtle deviations whose implications far exceeded their apparent insignificance. Alexander Fleming's discovery of penicillin, for instance, began when he noticed a small zone around a mold contaminant where bacteria had failed to grow, an observation that went on to revolutionize medicine. Looking ahead, the fusion of big data analytics with high-precision measurement tools promises an era in which tiny percentages are no longer dismissed but understood as gateways to innovation. Artificial intelligence, enhanced sensor systems, and increasingly sophisticated modeling techniques are likely to expand our capacity to interpret and leverage these fractions at scale.

Emerging Technologies Enhancing Small Percentage Analysis

Emerging technologies such as quantum computing may eventually accelerate certain classes of computation, which could help with analyzing vast datasets and extremely rare events. Similarly, developments in nanotechnology and bioinformatics enable measurement at molecular or atomic scales, revealing tiny variations that influence larger biological or physical systems. These advances are likely to sharpen our understanding of small percentages, with potential applications ranging from personalized medicine to environmental monitoring. The future of "20 of 13,000", and of similarly tiny fractions, is bright with possibilities, provided we continue refining our analytical frameworks and interpretive paradigms.

How do small percentages impact real-world decision-making?

Small percentages often serve as early warning signals or indicators of systemic issues. In healthcare, they can hint at rare side effects; in finance, they may foretell emerging market shifts. Their impact depends heavily on context, but when combined with expert analysis and robust modeling, they can lead to proactive strategies that prevent crises or capitalize on niche opportunities.

What statistical methods are best suited for analyzing tiny data effects?

Bayesian inference, hierarchical modeling, and Monte Carlo simulations stand out as effective tools. They accommodate small sample sizes, incorporate prior knowledge, and allow probabilistic reasoning. The choice depends on the domain, data quality, and specific research questions, but combining these methods often yields the most reliable insights into obscure or rare phenomena.

Are small percentages always worth acting upon?

Not necessarily. The decision hinges on cost-benefit considerations and the potential for small effects to cascade into larger systemic impacts. In safety-critical industries, even tiny failure rates warrant attention. In other areas, resource constraints and diminishing returns may suggest focusing elsewhere unless the small percentage signals a crucial underlying shift.

How can technological innovation improve small percentage analysis?

Advances such as AI-based pattern recognition, nano-sensors, and, in the longer term, quantum computing can substantially increase our ability to detect, quantify, and interpret tiny variations. These tools can reduce noise, enhance measurement precision, and facilitate real-time analysis, enabling stakeholders across sectors to make informed decisions based on previously unnoticed data points.