In the rapidly evolving landscape of digital communication and data management, the significance of effective annotation techniques and robust refusal mechanisms cannot be overstated. These components serve as foundational tools that influence user engagement, content moderation, and information integrity within diverse online platforms. As datasets grow exponentially and user interactions become more complex, understanding the nuanced interplay between annotations—used to add contextual information—and refusal protocols—designed to manage access and interaction—becomes essential for platform architects, content creators, and policymakers alike. This article aims to dissect these interconnected elements, providing a comprehensive analysis rooted in current technological paradigms, industry standards, and future implications. Drawing upon the expertise of scholars with extensive backgrounds in computer science, linguistic annotation, and digital ethics, the discussion will offer a layered perspective that bridges theoretical frameworks with practical implementations. As digital ecosystems are increasingly scrutinized for transparency and accountability, mastering the principles and applications of annotations and refusal mechanisms is vital for fostering trust, ensuring compliance, and advancing user-centric innovations.

Understanding Annotations in Digital Content Ecosystems

Annotations, while seemingly straightforward, encompass a broad spectrum of functionalities that significantly enhance the interpretability, accessibility, and utility of digital information. At their core, annotations are deliberate additions—comments, tags, metadata, or contextual cues—that provide supplementary insights or guide user interaction. They facilitate a nuanced layering of information, which enables systems to differentiate between core content and interpretative overlays, thereby supporting diverse use cases ranging from academic research to social media moderation.

Historically, annotation has roots in linguistic analysis, where marginal notes in manuscripts evolved into sophisticated digital tagging systems. In contemporary applications, annotations are integral to machine learning datasets: labels attached to raw data give learning algorithms the supervision they need. For example, in natural language processing (NLP), annotations such as part-of-speech tags, entity labels, and syntactic parses are crucial for training models capable of understanding linguistic nuance. Similarly, in image recognition, bounding boxes and classification labels annotate visual elements to improve model accuracy.
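As a rough illustration of what an entity label looks like in practice, the sketch below stores annotations as character spans kept separate from the text they describe. The `SpanAnnotation` class, the sample sentence, and the label names are assumptions made for this example, not a specific toolkit's format.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class SpanAnnotation:
    """A label attached to a character range of a source text (hypothetical type)."""
    start: int  # inclusive character offset
    end: int    # exclusive character offset
    label: str  # e.g. an entity type or a part-of-speech tag

    def text(self, source: str) -> str:
        # Recover the annotated substring from the untouched source text.
        return source[self.start:self.end]


sentence = "Ada Lovelace worked in London."
entities = [
    SpanAnnotation(0, 12, "PERSON"),
    SpanAnnotation(23, 29, "LOCATION"),
]

# Pair each annotated substring with its label, as a training set would.
labeled = [(a.text(sentence), a.label) for a in entities]
print(labeled)
```

Keeping offsets rather than copies of the text is one common design choice: the core content stays unchanged while several annotation layers can refer to it.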

From a technical perspective, annotation frameworks build on standards such as the Web Annotation Data Model, a W3C Recommendation that defines a common, JSON-LD-based structure for interoperable and reusable annotations. This standardization lets annotations be shared and understood regardless of the underlying system architecture, which facilitates collaborative research and multi-platform integration. Semantic annotations, which embed meaning in data by linking it to ontologies, additionally enable advanced reasoning and knowledge inference in AI systems.
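To make the standard more concrete, here is a minimal sketch that builds one annotation shaped like the Web Annotation Data Model and serializes it to JSON. The helper name `make_web_annotation` and the `example.org` URLs are hypothetical; the field names (`@context`, `type`, `motivation`, `body`, `target`, `TextQuoteSelector`) follow the W3C vocabulary.

```python
import json


def make_web_annotation(anno_id, target_url, exact_text, comment):
    """Build a dict shaped like a W3C Web Annotation (illustrative helper)."""
    return {
        "@context": "http://www.w3.org/ns/anno.jsonld",
        "id": anno_id,
        "type": "Annotation",
        "motivation": "commenting",
        # The body carries the supplementary information.
        "body": {"type": "TextualBody", "value": comment, "format": "text/plain"},
        # The target identifies what is annotated: a resource plus a selector
        # that picks out the quoted passage inside it.
        "target": {
            "source": target_url,
            "selector": {"type": "TextQuoteSelector", "exact": exact_text},
        },
    }


annotation = make_web_annotation(
    "https://example.org/anno/1",    # hypothetical annotation IRI
    "https://example.org/article",   # hypothetical annotated page
    "refusal mechanisms",
    "Define this term on first use.",
)
serialized = json.dumps(annotation, indent=2)
print(serialized)
```

Because the result is plain JSON, any system that understands the shared vocabulary can read it without knowing which platform produced it.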

Applications and Challenges of Digital Annotations

The deployment of annotations extends across multiple domains, each with unique challenges and opportunities. In scholarly publishing, annotations support peer review, collaborative editing, and knowledge curation. In social media, they support moderation by flagging inappropriate content, and they are also used to categorize posts for targeted advertising. Additionally, annotations enable accessibility features such as alt-text and captions, widening the inclusivity of digital platforms.

One of the persistent challenges lies in maintaining annotation quality and relevance over time. Annotations can become outdated or biased, particularly in dynamic environments like social media where content evolves rapidly. Automated annotation tools, powered by AI, seek to address scalability but face issues related to accuracy and contextual sensitivity. Ensuring annotations are precise and non-intrusive demands a combination of advanced algorithms and human oversight.
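One simple way to combine automated annotation with human oversight is to accept only high-confidence machine labels and queue the rest for review. The sketch below assumes each automated label comes with a confidence score; the `AutoAnnotation` type, the item IDs, and the 0.8 threshold are illustrative assumptions rather than recommended values.

```python
from typing import NamedTuple


class AutoAnnotation(NamedTuple):
    """A machine-generated label with a confidence score (hypothetical type)."""
    item_id: str
    label: str
    confidence: float


def route_for_review(annotations, threshold=0.8):
    """Split annotations into auto-accepted ones and ones needing a human check."""
    accepted = [a for a in annotations if a.confidence >= threshold]
    needs_review = [a for a in annotations if a.confidence < threshold]
    return accepted, needs_review


batch = [
    AutoAnnotation("post-1", "spam", 0.97),
    AutoAnnotation("post-2", "harassment", 0.55),
    AutoAnnotation("post-3", "benign", 0.91),
]
accepted, needs_review = route_for_review(batch)
print([a.item_id for a in accepted], [a.item_id for a in needs_review])
```

Lowering the threshold reduces reviewer workload at the cost of letting more uncertain labels through, so the value would need tuning per platform.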

| Category | Examples |
|---|---|
| Annotation Standards | Web Annotation Data Model, OBO Foundry |
| Common Use Cases | Legal document tagging, biomedical data curation, social media moderation |
| Technical Challenges | Bias in automated annotations, data privacy, interoperability issues |

Refusal Mechanisms: Control and Ethical Boundaries in Digital Interaction

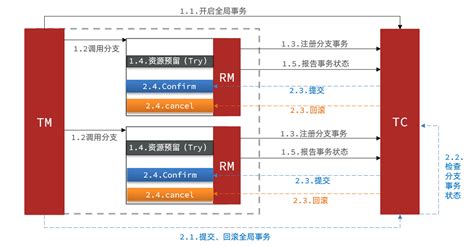

Refusal mechanisms are pivotal in establishing boundaries within digital environments, allowing systems to regulate access, limit interactions, or deny processing requests based on predefined criteria. These mechanisms serve multiple functions—from safeguarding user privacy to enforcing policy compliance—and are becoming ever more sophisticated as platforms grapple with complex legal and ethical landscapes.

Fundamentally, refusal protocols operate as gatekeeping tools, often embedded within API architectures, content moderation systems, and user interface controls. They can be as simple as blocking a user from posting certain keywords or as elaborate as conditional access controls driven by identity verification and contextual analysis. For example, under the GDPR a controller may refuse to act on data-subject requests that are manifestly unfounded or excessive (Article 12(5)), and platforms often automate that check. Similarly, content moderation platforms implement refusal protocols to automatically flag or reject inappropriate or harmful content, reducing human workload and speeding up response times.
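The simplest case mentioned above, keyword blocking, can be sketched as a rule-based filter that returns both a decision and a reason. The `Decision` type, the `check_post` function, and the blocked terms are all hypothetical, chosen only for illustration.

```python
import re
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Decision:
    """Outcome of a refusal check (hypothetical type)."""
    allowed: bool
    reason: Optional[str] = None


# Placeholder terms; a real platform would load its own policy list.
BLOCKED_TERMS = {"spamword", "scamlink"}


def check_post(text: str) -> Decision:
    """Refuse a post if any whole word matches a blocked term (case-insensitive)."""
    words = set(re.findall(r"[a-z0-9']+", text.lower()))
    hits = sorted(words & BLOCKED_TERMS)
    if hits:
        return Decision(False, "blocked terms: " + ", ".join(hits))
    return Decision(True)


print(check_post("Buy SPAMWORD now"))
print(check_post("A perfectly ordinary post"))
```

Matching whole words instead of substrings avoids refusing harmless words that merely contain a blocked term, which is a common source of false positives in naive filters.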

Modern refusal mechanisms increasingly leverage machine learning models trained to recognize patterns indicative of policy infractions or security threats. Yet, the design of effective refusal systems must balance automation with transparency. Excessively rigid or poorly calibrated refusals risk alienating users or suppressing legitimate interactions. Hence, adaptive refusal models are often integrated with explainability features, providing clarity on the reasons behind denial decisions.
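The calibration trade-off can be illustrated by measuring how a refusal threshold on a classifier score affects wrongful refusals and missed violations. This is a minimal sketch: the scores and ground-truth labels below are made-up values, and `error_rates` is a hypothetical helper, not part of any library.

```python
def error_rates(scores, is_violation, threshold):
    """Return (false_positive_rate, false_negative_rate) when refusing scores >= threshold."""
    pairs = list(zip(scores, is_violation))
    # False positive: legitimate content that gets refused.
    fp = sum(1 for s, v in pairs if s >= threshold and not v)
    # False negative: violating content that gets through.
    fn = sum(1 for s, v in pairs if s < threshold and v)
    negatives = sum(1 for _, v in pairs if not v)
    positives = sum(1 for _, v in pairs if v)
    fpr = fp / negatives if negatives else 0.0
    fnr = fn / positives if positives else 0.0
    return fpr, fnr


# Made-up classifier scores and ground-truth labels for six items.
scores = [0.95, 0.80, 0.60, 0.40, 0.30, 0.10]
labels = [True, True, False, True, False, False]

for threshold in (0.35, 0.7, 0.9):
    fpr, fnr = error_rates(scores, labels, threshold)
    print(f"threshold={threshold}: FPR={fpr:.2f}, FNR={fnr:.2f}")
```

On this toy data a low threshold refuses some legitimate items, while a high threshold lets more violations through, which is the tension that adaptive and explainable refusal models try to manage.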

Legal and Ethical Dimensions of Refusal Protocols

Refusal mechanisms tend to sit at the intersection of technical feasibility and ethical principle. They embody questions about user autonomy, platform responsibility, and societal norms. For instance, should a platform automatically refuse to amplify certain content, even if it’s not explicitly illegal? How transparent should refusal criteria be to avoid perceptions of censorship? These questions underscore ongoing debates within the field, prompting continuous refinement of policies and technical implementations.

From a compliance standpoint, refusal systems must adhere to relevant legislation such as the Digital Millennium Copyright Act (DMCA) or the California Consumer Privacy Act (CCPA). These acts prescribe specific conditions under which content or data access can be refused or restricted. Therefore, implementing these protocols requires a detailed understanding of legal frameworks and real-time adaptability to evolving regulatory landscapes.

| Category | Examples |
|---|---|
| Refusal Techniques | Rule-based filters, ML-driven classifiers, user authentication controls |
| Application Domains | Content moderation, API access control, privacy enforcement |
| Limitations | False positives/negatives, transparency issues, potential biases |

Interconnection of Annotations and Refusal Protocols in Practice

The synergy between annotations and refusal mechanisms manifests clearly in modern digital infrastructure. Annotations provide contextual granularity that refines refusal decisions, making them more precise and justifiable. Conversely, refusal protocols can generate annotations—logging the reasons for denials—to enhance accountability and facilitate audits.

Consider content moderation on large social media platforms: annotations tagging offensive language, hate speech, or misinformation enable the system to identify patterns and inform refusal decisions. In turn, when an interaction is refused, the system can annotate the incident with contextual metadata—such as user history, flagging reasons, or content type—forming a feedback loop that improves subsequent decision-making.

This interdependence underscores an important principle: Context-aware annotation improves the fairness and transparency of refusal protocols. For instance, intelligently annotated user behavior data can prevent wrongful refusals while ensuring harmful content is swiftly curtailed. Equally, well-designed refusal logs serve as valuable data for refining annotation models, closing the loop between descriptive metadata and prescriptive actions.
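The feedback loop described here can be sketched as two small steps: each refusal is logged as an annotated record, and the logged reasons are later turned into labeled examples for the annotation model. The record fields and function names are assumptions made for this example, not a specific platform's schema.

```python
from datetime import datetime, timezone


def refusal_record(content_id, content_type, reasons, prior_flags, now=None):
    """Annotate a refused interaction with contextual metadata (hypothetical schema)."""
    now = now or datetime.now(timezone.utc)
    return {
        "content_id": content_id,
        "content_type": content_type,
        "decision": "refused",
        "reasons": list(reasons),         # flagging reasons, kept for audits
        "user_prior_flags": prior_flags,  # coarse summary of user history
        "logged_at": now.isoformat(),
    }


def to_training_examples(records):
    """Turn refusal logs into (content_id, label) pairs for retraining an annotator."""
    return [(r["content_id"], reason) for r in records for reason in r["reasons"]]


log = [
    refusal_record("post-42", "text", ["hate_speech"], prior_flags=2,
                   now=datetime(2024, 1, 1, tzinfo=timezone.utc)),
    refusal_record("post-43", "image", ["misinformation", "spam"], prior_flags=0,
                   now=datetime(2024, 1, 2, tzinfo=timezone.utc)),
]
examples = to_training_examples(log)
print(examples)
```

The same records serve both purposes named in the text: they document why a denial happened, and they supply labeled data that can refine later annotation and refusal decisions.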

Key Points

- Precision and Context: Combining annotations with refusal mechanisms enhances decision accuracy, especially in complex content ecosystems.

- Transparency and Accountability: Logging refusal reasons via annotations fosters trust and supports compliance audits.

- Technological Integration: Leveraging machine learning for both annotation and refusal decisions promotes scalability but demands ongoing oversight.

- Ethical Balance: Strict refusal policies must be balanced with fairness, minimizing bias and respecting user rights.

- Future Directions: Emerging AI advancements suggest more adaptive, context-aware refusal-annotation systems, pushing toward greater autonomy and nuance.

Conclusion: Navigating Complexities for a Trustworthy Digital Future

The intertwined roles of annotations and refusal protocols form the bedrock of trustworthy and efficient digital systems. As platforms navigate an increasingly intricate web of data, regulation, and societal expectations, mastering these components is more than a technical necessity—it’s an ethical imperative. From enhancing machine understanding of human language to ensuring responsible content moderation, the evolution of these mechanisms shapes how information is curated, accessed, and trusted in modern cyberspace.

Looking forward, integrating advanced AI techniques with transparent, user-centric policies promises to elevate these systems further. Nonetheless, continuous vigilance—grounded in rigorous data governance, multidisciplinary collaboration, and adherence to evolving legal standards—remains essential. Only through such comprehensive efforts can we ensure that our digital ecosystems serve the dual goals of innovation and integrity.

How do annotations improve machine learning models in natural language processing?

Annotations provide metadata such as part-of-speech tags, named entities, and syntactic structures that guide algorithms to recognize patterns. This labeled data trains models to understand linguistic nuances, improving tasks like translation, sentiment analysis, and question-answering systems. Some studies report accuracy of up to 90% on well-annotated datasets, although the figure depends heavily on the task and the dataset.

What are the main challenges faced in implementing refusal protocols?

Key challenges include avoiding false positives that wrongly restrict legitimate content, ensuring transparency to maintain user trust, and adapting to complex legal frameworks. Balancing automation with human oversight is necessary to mitigate biases and address nuanced situations effectively.

Can annotations and refusal mechanisms be used to enhance content moderation?

Absolutely. Annotations help classify and contextualize content, enabling more precise refusal decisions. Conversely, refusal logs generate valuable annotations for future training, creating a feedback cycle that refines moderation accuracy while fostering accountability and transparency.
