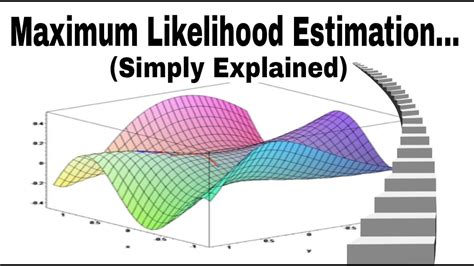

Maximum likelihood estimation is a fundamental concept in statistics, widely used for estimating the parameters of a statistical model. Given a dataset, the goal of maximum likelihood estimation is to find the values of the model parameters that make the observed data most likely. This approach is based on the idea that the best estimate of the model parameters is the one that maximizes the probability of observing the data. In this article, we will delve into the details of maximum likelihood estimation, exploring its underlying principles, applications, and limitations.

Introduction to Maximum Likelihood Estimation

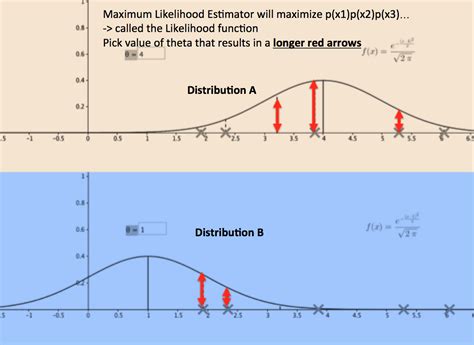

Maximum likelihood estimation is a statistical technique for estimating the parameters of a probability distribution from a sample of data. The method finds the parameter values that maximize the likelihood function, which treats the observed data as fixed and the parameters as variable. For discrete data, the likelihood is the probability of observing the data under the given parameters; for continuous data, it is the corresponding probability density. The maximum likelihood estimate (MLE) is the parameter value at which the likelihood function attains its maximum.

Key Concepts in Maximum Likelihood Estimation

Three concepts are essential to understanding maximum likelihood estimation: the likelihood function, the log-likelihood function, and the maximum likelihood estimate. The likelihood function is the joint probability density function (or, for discrete data, the joint probability mass function) of the data, viewed as a function of the model parameters. The log-likelihood function is the logarithm of the likelihood function; it is often easier to work with because it turns products into sums. Because the logarithm is strictly increasing, both functions are maximized at the same parameter value, and that value is the maximum likelihood estimate.

| Concept | Definition |
|---|---|
| Likelihood Function | The probability of observing the data, given the parameters of the model |
| Log-Likelihood Function | The logarithm of the likelihood function |
| Maximum Likelihood Estimate | The value of the parameter that maximizes the likelihood function or the log-likelihood function |
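The three concepts above can be illustrated with a small sketch. It assumes a hypothetical sample of ten independent coin flips modeled as Bernoulli trials with unknown success probability `p`; the data, the function names, and the coarse grid search are illustrative choices, not part of any particular library.

```python
import math


def likelihood(p, data):
    """Joint probability of independent Bernoulli(p) observations."""
    result = 1.0
    for x in data:
        result *= p if x == 1 else (1.0 - p)
    return result


def log_likelihood(p, data):
    """Logarithm of the likelihood: a sum of log-probabilities."""
    return sum(math.log(p) if x == 1 else math.log(1.0 - p) for x in data)


# Hypothetical sample: 7 successes in 10 trials.
data = [1, 0, 1, 1, 0, 1, 1, 1, 0, 1]

# Coarse grid of candidate values for p, kept strictly between 0 and 1
# so that the logarithms are defined.
grid = [i / 100 for i in range(1, 100)]

# Maximize each function over the grid.
p_hat_lik = max(grid, key=lambda p: likelihood(p, data))
p_hat_loglik = max(grid, key=lambda p: log_likelihood(p, data))

print("argmax of likelihood:    ", p_hat_lik)
print("argmax of log-likelihood:", p_hat_loglik)
```

Both searches select the same grid point, which matches the proportion of successes in the sample. A grid search is used here only to keep the sketch transparent; for this model the maximizer is also available in closed form.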

Applications of Maximum Likelihood Estimation

Maximum likelihood estimation has a wide range of applications in statistics, engineering, and computer science. Some of the key applications include parameter estimation, hypothesis testing, and model selection. Maximum likelihood estimation is widely used in regression analysis, time series analysis, and signal processing. It is also used in machine learning and artificial intelligence to estimate the parameters of complex models.

Advantages and Limitations of Maximum Likelihood Estimation

Maximum likelihood estimation has several advantages: under standard regularity conditions, and when the model is correctly specified, it provides consistent and asymptotically efficient estimates of the model parameters. It also has limitations. The estimates depend on the chosen model and on the assumption of a specific probability distribution, so a misspecified model can give misleading results. In addition, maximum likelihood estimation can be computationally intensive when no closed-form solution exists and the likelihood must be maximized numerically, especially for large datasets.

Key Points

- Maximum likelihood estimation is a widely used technique for estimating the parameters of a statistical model

- The method involves finding the values of the parameters that maximize the likelihood function

- Maximum likelihood estimation has a wide range of applications in statistics, engineering, and computer science

- The method has several advantages, including its ability to provide consistent and asymptotically efficient estimates

- However, it also has some limitations, including its sensitivity to the choice of model and the assumption of a specific probability distribution

Mathematical Formulation of Maximum Likelihood Estimation

The mathematical formulation of maximum likelihood estimation starts from the likelihood function, the joint probability density function of the data viewed as a function of the model parameters. When the observations are independent, the joint density factorizes into a product of per-observation densities, so the log-likelihood function becomes a sum of per-observation log-densities, which is usually easier to differentiate and more stable to compute. The maximum likelihood estimate is the parameter value that maximizes either function. It can often be found by setting the derivative of the log-likelihood to zero, and by numerical optimization when no closed-form solution exists.
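The formulation described above can be written out as follows. This is a sketch under stated assumptions: the observations $x_1, \dots, x_n$ are independent and identically distributed with density $f(x \mid \theta)$, and the symbols $L$, $\ell$, and $\hat{\theta}_{\mathrm{MLE}}$ are notation introduced here for illustration.

```latex
\begin{align}
L(\theta) &= \prod_{i=1}^{n} f(x_i \mid \theta) \\
\ell(\theta) &= \log L(\theta) = \sum_{i=1}^{n} \log f(x_i \mid \theta) \\
\hat{\theta}_{\mathrm{MLE}} &= \arg\max_{\theta} L(\theta) = \arg\max_{\theta} \ell(\theta)
\end{align}
```

When $\ell$ is differentiable and the maximum lies in the interior of the parameter space, the estimate satisfies $\partial \ell(\theta) / \partial \theta = 0$ at $\theta = \hat{\theta}_{\mathrm{MLE}}$.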

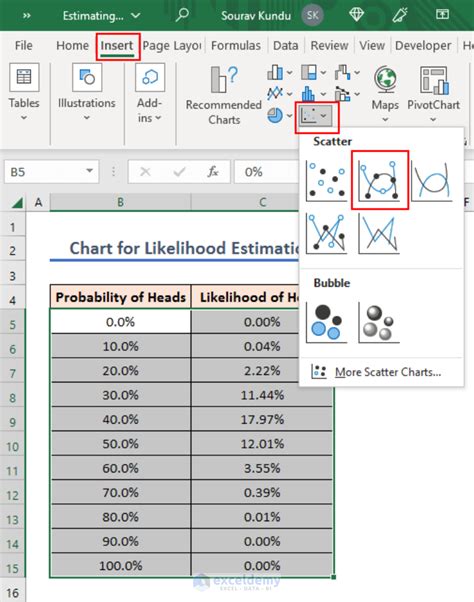

Example of Maximum Likelihood Estimation

A standard example of maximum likelihood estimation is estimating the mean and variance of a normal distribution. Given a sample of data, the likelihood function is the joint probability density function of the data, viewed as a function of the mean and variance. Taking the log-likelihood and setting its partial derivatives to zero gives closed-form estimates, so no numerical optimization is needed in this case. Numerical optimization techniques are used for models where no closed-form solution exists.

| Parameter | Maximum Likelihood Estimate |
|---|---|
| Mean | The sample mean |
| Variance | The sample variance with divisor n (the biased version, not the n - 1 version) |
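The normal-distribution example can be sketched in a few lines of Python using only the standard library. The sample values and the function names `normal_mle` and `normal_log_likelihood` are hypothetical, chosen for illustration. The variance estimate divides by n rather than n - 1, which is what maximizing the likelihood yields.

```python
import math
import statistics


def normal_mle(sample):
    """Closed-form maximum likelihood estimates for a normal model."""
    n = len(sample)
    mu_hat = sum(sample) / n
    # The MLE of the variance divides by n, not n - 1.
    var_hat = sum((x - mu_hat) ** 2 for x in sample) / n
    return mu_hat, var_hat


def normal_log_likelihood(sample, mu, var):
    """Log-likelihood of an i.i.d. normal sample with mean mu and variance var."""
    n = len(sample)
    squared_error = sum((x - mu) ** 2 for x in sample)
    return -0.5 * n * math.log(2 * math.pi * var) - squared_error / (2 * var)


# Hypothetical measurements.
sample = [4.9, 5.1, 5.6, 4.4, 5.0, 5.3, 4.7, 5.0]

mu_hat, var_hat = normal_mle(sample)

print("MLE of the mean:         ", mu_hat)
print("MLE of the variance:     ", var_hat)
print("unbiased sample variance:", statistics.variance(sample))

# Moving away from the estimates lowers the log-likelihood.
print(normal_log_likelihood(sample, mu_hat, var_hat))
print(normal_log_likelihood(sample, mu_hat + 0.1, var_hat))
print(normal_log_likelihood(sample, mu_hat, var_hat * 1.2))
```

The variance estimate agrees with `statistics.pvariance` and is smaller than `statistics.variance`, which uses the n - 1 divisor. The gap between the two shrinks as the sample grows.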

What is the difference between maximum likelihood estimation and Bayesian estimation?

Maximum likelihood estimation and Bayesian estimation are two different approaches to estimating the parameters of a statistical model. Maximum likelihood estimation involves finding the values of the parameters that maximize the likelihood function, while Bayesian estimation involves updating the prior distribution of the parameters using the observed data.
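A minimal sketch of the contrast, assuming a Bernoulli model for a hypothetical count of successes and, for the Bayesian side, a Beta prior (a conjugate choice made here for convenience, not something the comparison requires). The function names and the default prior parameters are illustrative.

```python
def bernoulli_mle(successes, trials):
    """Maximum likelihood estimate of the success probability."""
    return successes / trials


def beta_posterior_mean(successes, trials, alpha=1.0, beta=1.0):
    """Posterior mean of the success probability under a Beta(alpha, beta) prior.

    With a Beta prior and Bernoulli data, the posterior is
    Beta(alpha + successes, beta + failures).
    """
    return (alpha + successes) / (alpha + beta + trials)


# Hypothetical data: 7 successes in 10 trials.
mle = bernoulli_mle(7, 10)
posterior_mean = beta_posterior_mean(7, 10)

print("MLE:           ", mle)
print("posterior mean:", posterior_mean)

# With more data in the same proportion, the prior matters less.
print("MLE (n=1000):           ", bernoulli_mle(700, 1000))
print("posterior mean (n=1000):", beta_posterior_mean(700, 1000))
```

The maximum likelihood estimate uses only the data, while the posterior mean is pulled toward the prior. As the sample grows, the two estimates move closer together.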

What are the advantages of maximum likelihood estimation?

The advantages of maximum likelihood estimation include its ability to provide consistent and asymptotically efficient estimates of the model parameters. Additionally, maximum likelihood estimation is widely used in statistics, engineering, and computer science, and has a wide range of applications in regression analysis, time series analysis, and signal processing.

What are the limitations of maximum likelihood estimation?

The limitations of maximum likelihood estimation include its sensitivity to the choice of model and the assumption of a specific probability distribution. Additionally, maximum likelihood estimation can be computationally intensive, especially for large datasets.

In conclusion, maximum likelihood estimation is a powerful tool for estimating the parameters of a statistical model. The method involves finding the values of the parameters that maximize the likelihood function, and has a wide range of applications in statistics, engineering, and computer science. However, it also has some limitations, including its sensitivity to the choice of model and the assumption of a specific probability distribution. By understanding the principles and limitations of maximum likelihood estimation, researchers and practitioners can apply this technique to a wide range of problems, from regression analysis to machine learning and artificial intelligence.
