Uniform variance, a fundamental concept in statistics and data analysis, refers to the consistency of variance across different levels of an independent variable or across different groups within a dataset. The principle is crucial in many statistical analyses, including regression analysis, where the assumption of uniform variance (homoscedasticity) underpins the validity of standard errors and significance tests. Violations of this assumption (heteroscedasticity) can lead to incorrect conclusions about the relationships between variables. In this article, we look at how uniform variance works, exploring its implications, applications, and the methods used to detect and address non-uniform variance.

Key Points

- Uniform variance is essential for the validity of many statistical analyses, ensuring that the spread of data points is consistent across different groups or levels of an independent variable.

- The assumption of uniform variance can be tested using various methods, including visual inspections, Levene's test, and the Breusch-Pagan test.

- Transforming data, such as through logarithmic or square root transformations, can help achieve uniform variance when it is not initially met.

- Generalized linear models (GLMs) and generalized additive models (GAMs) offer alternatives to traditional linear regression when data do not meet the assumption of uniform variance.

- Understanding and addressing non-uniform variance is critical for making accurate predictions and drawing valid conclusions in data analysis.

The Importance of Uniform Variance in Statistical Analysis

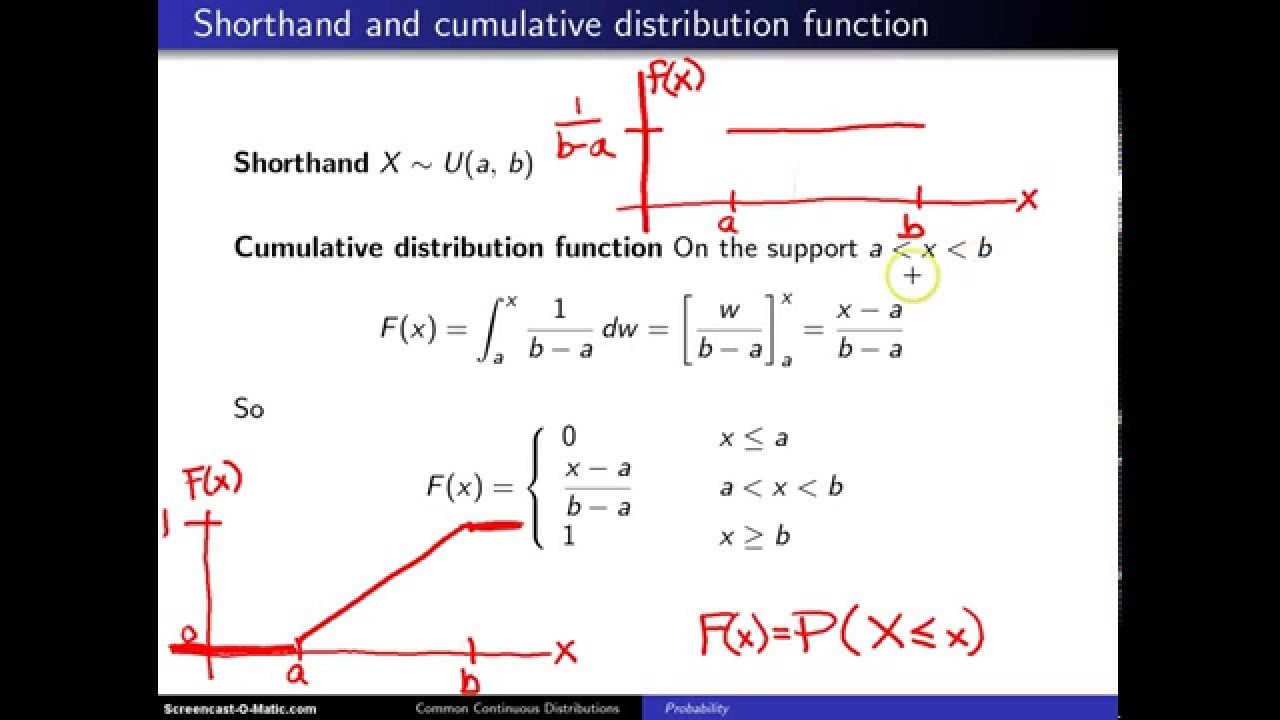

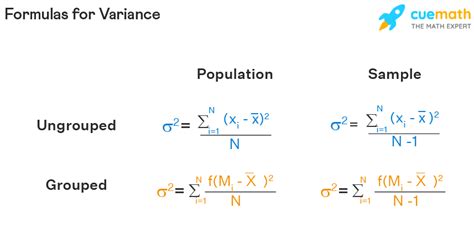

Uniform variance plays a pivotal role in ensuring the reliability and accuracy of statistical models. It is a key assumption in many statistical tests and models, including analysis of variance (ANOVA), regression analysis, and t-tests. When variance is uniform, the data points are equally spread out across different levels of the independent variable. These tests rely on that property to produce correct standard errors, confidence intervals, and p-values, and therefore valid inferences.

In the absence of uniform variance, the results of statistical analyses can be misleading. For instance, in regression analysis, non-uniform variance can lead to inefficient estimates of regression coefficients and incorrect conclusions about the significance of predictors. Therefore, it is essential to check for uniform variance and to take corrective measures when this assumption is violated.

Methods for Checking Uniform Variance

Several methods are available for checking the assumption of uniform variance, including visual inspections, such as plotting residuals against fitted values, and formal statistical tests, like Levene’s test and the Breusch-Pagan test. Visual inspections can provide a preliminary indication of whether variance is consistent across different levels of an independent variable, while statistical tests offer a more rigorous assessment.
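As a rough illustration of the formal route, the sketch below computes a Breusch-Pagan style statistic by hand for a single predictor. It uses the studentized (Koenker) form, which is an assumption on our part: fit a least-squares line, regress the squared residuals on the predictor, and take n times the R-squared of that auxiliary regression. The function names and the toy data are hypothetical, and 3.841 is the 5% critical value of a chi-square distribution with one degree of freedom.

```python
def ols_fit(x, y):
    """Return (intercept, slope) of a simple least-squares line."""
    n = len(x)
    x_mean = sum(x) / n
    y_mean = sum(y) / n
    sxx = sum((xi - x_mean) ** 2 for xi in x)
    sxy = sum((xi - x_mean) * (yi - y_mean) for xi, yi in zip(x, y))
    slope = sxy / sxx
    return y_mean - slope * x_mean, slope


def r_squared(x, y):
    """R-squared of the simple regression of y on x."""
    intercept, slope = ols_fit(x, y)
    y_mean = sum(y) / len(y)
    ss_res = sum((yi - (intercept + slope * xi)) ** 2 for xi, yi in zip(x, y))
    ss_tot = sum((yi - y_mean) ** 2 for yi in y)
    return 1.0 - ss_res / ss_tot


def breusch_pagan_lm(x, y):
    """Studentized Breusch-Pagan statistic: n * R^2 of squared residuals on x."""
    intercept, slope = ols_fit(x, y)
    squared_resid = [(yi - (intercept + slope * xi)) ** 2 for xi, yi in zip(x, y)]
    return len(x) * r_squared(x, squared_resid)


# Hypothetical toy data: same trend, two different noise patterns.
x = [float(i) for i in range(1, 21)]
signs = [1.0 if i % 2 == 0 else -1.0 for i in range(1, 21)]
y_const = [2 * xi + s for xi, s in zip(x, signs)]           # constant spread
y_fan = [2 * xi + 0.5 * xi * s for xi, s in zip(x, signs)]  # spread grows with x

CHI2_CRIT_1DF = 3.841  # 5% critical value, chi-square with 1 degree of freedom
for label, y in [("constant spread", y_const), ("fanning spread", y_fan)]:
    lm = breusch_pagan_lm(x, y)
    print(label, round(lm, 3), "reject" if lm > CHI2_CRIT_1DF else "do not reject")
```

A statistic above the critical value suggests that the residual spread depends on the predictor. In practice an established statistics library would normally be used instead of hand-rolled code, and a residuals-versus-fitted plot is still worth inspecting alongside the test.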

Levene’s test, for example, is commonly used to assess whether the variance of the residuals is equal across groups. It calculates the absolute differences between each data point and the group mean, then performs an ANOVA on these differences to test the null hypothesis that the variance is equal across all groups. If the null hypothesis is rejected, it indicates that the variance is not uniform.
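The two steps described above can be sketched in a few lines of plain Python. This is a minimal illustration of the mean-centered version of the statistic, not a replacement for a library routine. The function name `levene_w` and the sample groups are hypothetical. The resulting W is compared against an F distribution with k - 1 and N - k degrees of freedom, where k is the number of groups and N the total sample size.

```python
from statistics import mean


def levene_w(groups):
    """Levene's W using absolute deviations from each group's mean."""
    # Step 1: absolute deviation of every point from its own group mean.
    deviations = []
    for g in groups:
        center = mean(g)
        deviations.append([abs(v - center) for v in g])

    # Step 2: one-way ANOVA F statistic computed on those deviations.
    k = len(groups)
    n_total = sum(len(d) for d in deviations)
    group_means = [mean(d) for d in deviations]
    grand_mean = sum(sum(d) for d in deviations) / n_total
    between = sum(len(d) * (m - grand_mean) ** 2
                  for d, m in zip(deviations, group_means))
    within = sum((z - m) ** 2
                 for d, m in zip(deviations, group_means) for z in d)
    return (n_total - k) / (k - 1) * between / within


# Hypothetical groups: a and b share the same spread, c is far more dispersed.
a = [1.0, 2.0, 3.0, 4.0, 5.0]
b = [11.0, 12.0, 13.0, 14.0, 15.0]
c = [0.0, 10.0, 20.0, 30.0, 40.0]

print(levene_w([a, b]))  # same spread, different location
print(levene_w([a, c]))  # very different spread
```

Shifting a group's location leaves W unchanged, because only deviations from the group's own center enter the calculation. A common variant centers on the group median instead of the mean, which is more robust for skewed data.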

Addressing Non-Uniform Variance

When data do not meet the assumption of uniform variance, several strategies can be employed to address this issue. One common approach is to transform the data. For example, if the variance increases with the mean, a logarithmic transformation might stabilize the variance. Other transformations, such as square root or inverse transformations, can also be effective, depending on the nature of the relationship between the mean and variance.
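To see why a logarithm helps when spread grows with the mean, consider a small made-up example in which the second group is simply the first group multiplied by ten. The values below are hypothetical and chosen only to make the effect easy to see.

```python
import math
from statistics import variance

# Hypothetical measurements: "high" is "low" scaled by 10,
# so its standard deviation is 10 times larger (variance 100 times larger).
low = [8.0, 10.0, 12.5]
high = [80.0, 100.0, 125.0]

raw_ratio = variance(high) / variance(low)

# On the log scale a multiplicative factor becomes an additive shift,
# which changes the mean but not the variance.
log_low = [math.log(v) for v in low]
log_high = [math.log(v) for v in high]
log_ratio = variance(log_high) / variance(log_low)

print("variance ratio, raw scale:", raw_ratio)
print("variance ratio, log scale:", log_ratio)
```

The same reasoning suggests when a logarithm is a poor fit: if the variance grows roughly in proportion to the mean rather than to its square, a square root transformation is usually the more natural first attempt.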

In addition to data transformation, alternative statistical models can be used. Generalized linear models (GLMs) and generalized additive models (GAMs) are flexible modeling approaches that can accommodate non-uniform variance by allowing the variance to be a function of the mean. These models are particularly useful when the relationship between variables is not linear or when the data exhibit characteristics that violate the assumptions of traditional linear regression.
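One way to make "variance as a function of the mean" concrete is the variance function used in GLMs. The notation below is the conventional one and is introduced here as an assumption, since the article itself does not define symbols: $\mu_i$ is the mean of observation $i$, $\phi$ is a dispersion parameter, and $V(\cdot)$ is the variance function tied to the chosen distribution family.

$$
\operatorname{Var}(Y_i) = \phi \, V(\mu_i), \qquad
V(\mu) =
\begin{cases}
1 & \text{Gaussian (constant variance)} \\
\mu & \text{Poisson} \\
\mu^{2} & \text{Gamma}
\end{cases}
$$

Ordinary linear regression corresponds to the Gaussian case, where the variance does not depend on the mean. Choosing a Poisson or Gamma family builds a mean-dependent variance directly into the model instead of removing it with a transformation.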

| Statistical Method | Purpose | Description |
|---|---|---|
| Levene's Test | Testing Uniform Variance | Assesses if the variance of the residuals is equal across groups. |
| Logarithmic Transformation | Data Transformation | Stabilizes variance when it increases with the mean. |
| Generalized Linear Models (GLMs) | Alternative Modeling | Accommodates non-uniform variance by modeling variance as a function of the mean. |

Implications of Non-Uniform Variance

The implications of non-uniform variance can be profound, affecting not only the validity of statistical analyses but also the interpretation of results. In fields such as medicine, finance, and social sciences, where data analysis informs critical decisions, ensuring that the assumptions underlying statistical models are met is paramount. Ignoring non-uniform variance can overstate or understate the uncertainty and statistical significance of estimated effects, potentially resulting in misguided policies, ineffective interventions, or harmful decisions.

Furthermore, the presence of non-uniform variance can signal underlying issues with the data collection process or the theoretical framework guiding the analysis. For instance, it might indicate that the relationship between variables is more complex than initially assumed or that there are unaccounted factors influencing the data. Thus, addressing non-uniform variance is not only a statistical necessity but also an opportunity to refine theoretical models and improve the quality of data collection methods.

Forward-Looking Implications

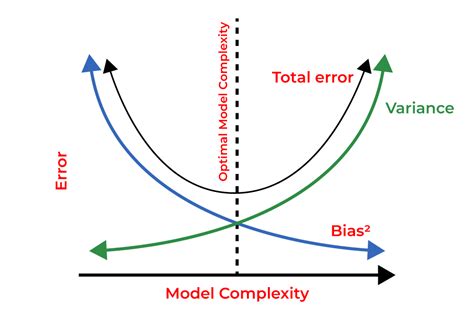

As data analysis continues to play an increasingly critical role in decision-making across various sectors, the importance of uniform variance will only grow. The development of new statistical methods and models that can effectively handle non-uniform variance will be crucial. Moreover, enhancing awareness and understanding of the concept among practitioners and researchers will be essential for ensuring that data-driven insights are reliable and actionable.

The integration of machine learning and artificial intelligence techniques with traditional statistical methods also presents opportunities for more effectively addressing non-uniform variance. These approaches can offer more flexible and robust ways to model complex relationships and variability in data, potentially leading to more accurate predictions and a deeper understanding of the underlying mechanisms driving the data.

What is uniform variance, and why is it important in statistical analysis?

Uniform variance refers to the consistency of variance across different levels of an independent variable or across different groups within a dataset. It is crucial because many statistical tests and models, including regression analysis and ANOVA, assume uniform variance in order to produce correct standard errors and valid inferences.

How can non-uniform variance be addressed in data analysis?

Non-uniform variance can be addressed through data transformation, such as logarithmic or square root transformations, and by using alternative statistical models like generalized linear models (GLMs) and generalized additive models (GAMs) that can accommodate varying variance structures.

What are the implications of ignoring non-uniform variance in statistical analysis?

Ignoring non-uniform variance can lead to incorrect conclusions about the relationships between variables, inefficient estimates of effects, and potentially harmful decisions based on flawed analysis. It underscores the importance of checking for and addressing non-uniform variance in data analysis.
