Unit conversion is fundamental in engineering, manufacturing, and everyday measurement. Converting between inches and millimeters is especially common, because metric standards dominate globally while imperial units persist in certain industries. The calculation itself is simple, but it must be applied precisely wherever critical specifications are involved. This guide walks through the conversion of 5 inches into millimeters and the measurement principles behind it. It is written with professionals in design, machining, and quality control in mind, while remaining accessible to readers at any level of expertise.

Key Points

- Exact conversion factors underpin precise measurement translation from inches to millimeters.

- Understanding the historical context of units aids in more effective application.

- Applying conversion constants correctly avoids costly errors in manufacturing and engineering.

- Recognizing the nuances of measurement accuracy enhances quality assurance efforts.

- Practical tips for quick estimation provide value in rapid prototyping and fieldwork.

Fundamentals of Length Measurement and Unit Systems

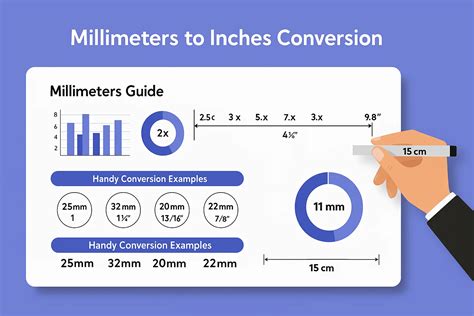

Length measurement units are foundational to countless scientific and industrial processes. The imperial system, with the inch as a key unit, has roots dating back centuries to early English measurement standards. The metric system, established in France during the late 18th century, later became the basis of the SI (International System of Units), in which the millimeter is one-thousandth of a meter. Although the two systems evolved independently, both remain in use side by side, so accurate conversion methods are required. In practice, converting between inches and millimeters facilitates international trade and helps ensure compliance with engineering tolerances and design specifications.

Historical Context and Evolution of Units

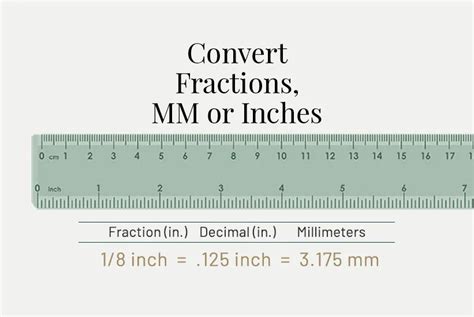

The inch originated from various traditional length measures used across different regions, often based on body parts or conventional standards. Its definition has evolved, but today it is defined as exactly 25.4 millimeters, a value fixed by the international yard and pound agreement of 1959. This standardization was driven by the need for consistency as industries expanded globally, and it eliminated errors caused by regional variations. The millimeter, one-thousandth of a meter in the SI, is fine enough for most detailed engineering dimensions.

Mathematical Foundations of the Conversion Process

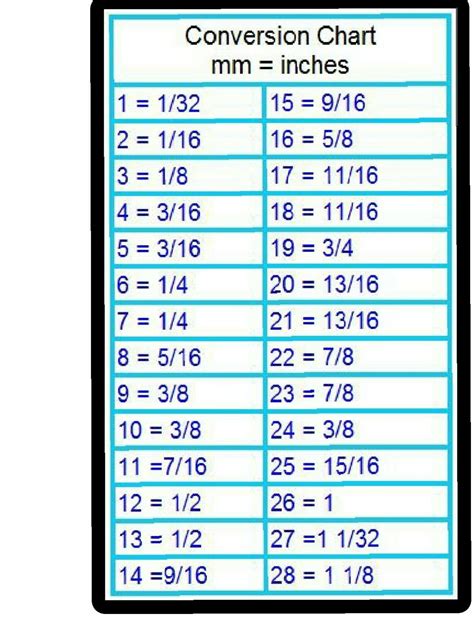

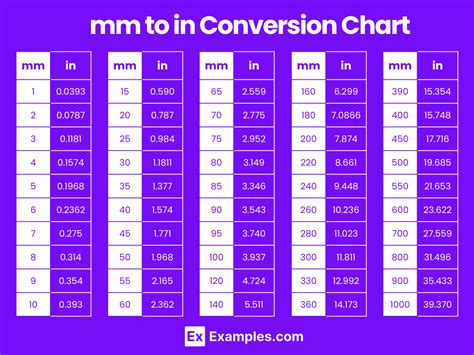

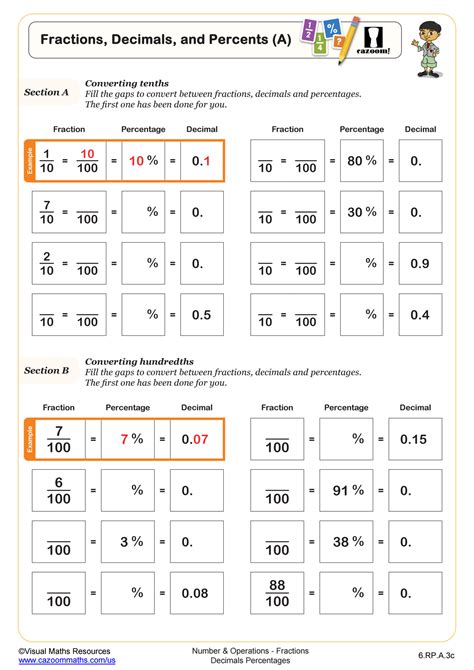

The core of converting 5 inches into millimeters lies in understanding and applying the fixed conversion constant. Given that one inch equals exactly 25.4 millimeters, the calculation process involves a straightforward multiplication:

- Conversion factor: 1 inch = 25.4 mm

- Quantitative expression: 5 inches × 25.4 mm/inch

Executing this calculation yields the precise measurement in millimeters:

| Conversion Step | Calculation | Result |
|---|---|---|
| Inches to millimeters | 5 × 25.4 | 127 mm |

This exact value ensures accuracy in technical applications where even minor discrepancies can lead to material failures or design flaws.
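The multiplication above can be sketched in a few lines of Python. This is a minimal illustration rather than a reference implementation: the names `MM_PER_INCH` and `inches_to_mm` are assumptions chosen for this example, and `Decimal` is used here only so that the result is not affected by binary floating-point rounding.

```python
from decimal import Decimal

# Exact by definition: 1 inch = 25.4 mm.
MM_PER_INCH = Decimal("25.4")


def inches_to_mm(inches) -> Decimal:
    """Convert a length in inches to millimeters using the exact factor.

    `inches` may be an int, float, str, or Decimal (hypothetical helper).
    """
    # Going through str() reads a float such as 0.1 as written,
    # not as its binary approximation.
    return Decimal(str(inches)) * MM_PER_INCH


print(inches_to_mm(5))  # 127.0
```

For everyday use a plain `5 * 25.4` is usually sufficient; the decimal approach matters mainly when many converted values are summed or compared for equality.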

Typical Use Cases and Practical Applications

The straightforward conversion from inches to millimeters is a staple procedure for engineers, machinists, and designers alike. For example, in CNC (Computer Numerical Control) machining, dimensions often need to be specified precisely in millimeters, even if the original design was made using imperial units. Accurate conversions ensure parts fit together correctly, eliminating costly reworks or malfunctions. Furthermore, in sectors like healthcare—where measurements of prosthetics or medical devices must adhere to strict tolerances—correct unit conversion underpins safety and functionality.

Measurement in Manufacturing and Quality Control

In manufacturing, particularly in industries such as automotive and aerospace, adherence to dimensional tolerances is non-negotiable. When tolerances are specified in millimeters, converting inch-based measurements ensures conformity with international standards and simplifies communication between global teams. Precise conversion also matters during inspection, where micrometers or coordinate measuring machines (CMMs) record tiny differences: an error of just a fraction of a millimeter can decide whether a part passes or fails.

| Application Area | Typical Precision or Scale |
|---|---|
| Machining tolerances | ±0.1 mm |
| Medical device dimensions | 1–10 mm precision required |
| Structural component gaps | Measured typically in millimeters for accuracy |
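As a rough illustration of how a converted nominal feeds into inspection, the sketch below checks a measured value in millimeters against a nominal dimension given in inches. The function name `within_tolerance`, the sample readings, and the default ±0.1 mm band (taken from the machining row of the table) are assumptions for this example, not values from any particular standard.

```python
MM_PER_INCH = 25.4  # exact by definition


def within_tolerance(measured_mm: float, nominal_in: float, tol_mm: float = 0.1) -> bool:
    """Return True if a measurement in mm lies within ±tol_mm of an inch nominal."""
    nominal_mm = nominal_in * MM_PER_INCH
    return abs(measured_mm - nominal_mm) <= tol_mm


# Hypothetical readings for a part with a 5-inch (127 mm) nominal dimension.
print(within_tolerance(127.08, 5))  # True
print(within_tolerance(127.25, 5))  # False
```

Readings that fall exactly on the tolerance boundary can be affected by floating-point rounding, so a production check would likely need an explicit policy for that case.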

Common Pitfalls and Best Practices in Conversion

While the calculation is mathematically simple, human error and misapplied conversion factors can still introduce inaccuracies. One common mistake is treating 1 inch = 25.4 mm as an approximation, when the value is exact and fixed by international agreement. Another is substituting a rounded figure, such as 25 mm, for simplicity: at 5 inches this yields 125 mm instead of 127 mm, a 2 mm error that compromises precision, especially in the final stages of design or fabrication.
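To see how the error from a rounded factor grows with length, the following minimal sketch compares the exact 25.4 mm per inch with the 25 mm shortcut. The function name `rounding_error_mm` and the sample lengths are illustrative assumptions.

```python
EXACT_MM_PER_INCH = 25.4
ROUNDED_MM_PER_INCH = 25.0  # the shortcut discussed above


def rounding_error_mm(inches: float) -> float:
    """Difference in mm between the exact and the rounded conversion."""
    return inches * EXACT_MM_PER_INCH - inches * ROUNDED_MM_PER_INCH


for inches in (1, 5, 20):
    print(f"{inches} in: off by {rounding_error_mm(inches):.1f} mm")
# 1 in: off by 0.4 mm
# 5 in: off by 2.0 mm
# 20 in: off by 8.0 mm
```

The error is proportional to the length (about 1.6% of the true value), so a shortcut that looks harmless on a small feature becomes significant on a larger one.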

Strategies to Ensure Conversion Accuracy

- Always Use Exact Constants: Rely on the fixed value of 25.4 mm per inch. Never approximate unless in preliminary estimations.

- Double-Check Calculations: Employ calculators or software that uses the exact conversion constants to avoid manual errors.

- Contextual Verification: Cross-reference measurements with known standards or calibration data in measurement instruments.

- Consistency in Units: Maintain unit consistency throughout the workflow—convert all dimensions before starting detailed analysis.
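One way to apply the unit-consistency strategy is to normalize every dimension to millimeters before any further analysis. The sketch below assumes a hypothetical `to_mm` helper, a small lookup table of exact factors, and made-up part dimensions; it is an illustration of the idea, not a prescribed workflow.

```python
# Millimeters per unit; all three factors are exact by definition.
MM_PER_UNIT = {"mm": 1.0, "cm": 10.0, "in": 25.4}


def to_mm(value: float, unit: str) -> float:
    """Convert a value in the given unit to millimeters."""
    try:
        return value * MM_PER_UNIT[unit]
    except KeyError:
        # Fail loudly instead of silently passing through an unknown unit.
        raise ValueError(f"unsupported unit: {unit!r}") from None


# Hypothetical part dimensions recorded in mixed units.
dimensions = {"length": (5, "in"), "width": (80, "mm"), "depth": (1.2, "cm")}

# Convert everything once, up front, so later steps only ever see mm.
normalized = {name: to_mm(value, unit) for name, (value, unit) in dimensions.items()}
print(normalized)
```

Rejecting unknown units with an error, rather than guessing, keeps a mislabeled dimension from slipping into downstream calculations.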

The Broader Significance and Future Trends

The shift toward metric measurement in global industries is part of a larger trend toward standardization, which enhances interoperability and simplifies technical communication. The precise conversion of imperial units, like inches, into metric units, like millimeters, remains vital in sectors where legacy designs coexist with modern manufacturing processes. Technological advancements, including digital measurement recording and real-time conversion tools, are making this transition more seamless.

Furthermore, emerging industries such as additive manufacturing (3D printing) and biometrics continually emphasize the need for high-precision measurement conversions. For example, producing biomedical device components with tolerances of less than a millimeter demands not only advanced measurement techniques but also a robust understanding of conversion standards.

Emerging Trends and Technological Innovations

- Advanced CAD software integrating automatic unit conversion capabilities, minimizing manual input errors.

- Smart measurement devices capable of real-time conversions, data logging, and error alerts.

- Global standard initiatives aiming to harmonize measurement units across industries, facilitating international collaboration.

Conclusion: The Practical Importance of Accurate Conversion

While converting five inches to millimeters may seem like a straightforward calculation, its importance resonates across diverse domains—from precision engineering to everyday DIY projects. Recognizing that 1 inch equals exactly 25.4 millimeters allows professionals and hobbyists alike to translate measurements confidently, ensuring compatibility, safety, and quality. Emphasizing the fixed nature of this conversion constant, integrating best practices, and utilizing technological tools elevate the precision and efficiency of measurement tasks. As industries evolve and standards become more interconnected, mastering the nuances of unit conversion remains an essential skill—one that underpins the integrity of countless products and systems worldwide.

What is the exact value of 1 inch in millimeters?

One inch is precisely defined as 25.4 millimeters, a standard established by international agreement in 1959 to ensure consistency across industries and applications.

How do I convert inches to millimeters manually?

Multiply the number of inches by 25.4. For example, 5 inches × 25.4 = 127 millimeters. This straightforward multiplication provides an exact conversion result.

Are there digital tools to assist with unit conversions?

Yes, many CAD programs, measurement devices, and online calculators automatically perform unit conversions using fixed constants, reducing the risk of human error and streamlining workflows.

Why is understanding the conversion from inches to millimeters important?

Precise conversion ensures dimensional accuracy in manufacturing, design, and engineering. Small errors can lead to assembly issues, material waste, and safety concerns, particularly in high-precision industries such as aerospace or healthcare.

How does the historical evolution of units influence modern measurement?

The inch’s origin in ancient measuring systems and its subsequent standardization as exactly 25.4 mm reflect efforts to unify measurement standards across regions, facilitating international commerce and technological development.