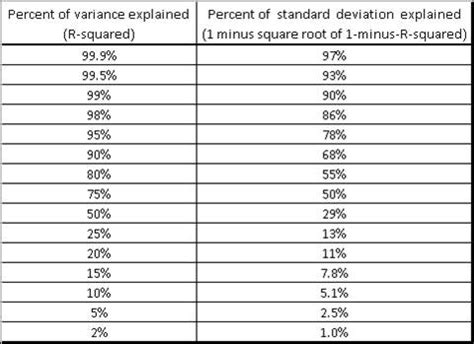

What counts as a good R squared value is a frequent topic of discussion in statistical analysis. R squared, also known as the coefficient of determination, is a statistical measure of the proportion of the variance in the dependent variable that is predictable from the independent variable(s) in a regression model. In essence, it indicates how well the model fits the data. For an ordinary least-squares (OLS) model with an intercept, evaluated on the data it was fitted to, R squared ranges from 0 to 1: 0 indicates that the model explains none of the variation in the dependent variable, and 1 indicates that it explains all of the variation.
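The definition above corresponds to the usual formula R² = 1 - SS_res / SS_tot, where SS_res is the sum of squared residuals and SS_tot is the total sum of squares of the observations around their mean. Below is a minimal sketch in plain Python, assuming you already have the observed values and the model's predictions; the function name `r_squared` and the sample numbers are illustrative only.

```python
def r_squared(y_true, y_pred):
    """Coefficient of determination: 1 - SS_res / SS_tot."""
    if len(y_true) != len(y_pred) or not y_true:
        raise ValueError("inputs must be non-empty and the same length")
    mean_y = sum(y_true) / len(y_true)
    # Total sum of squares: variation of the observations around their mean.
    ss_tot = sum((y - mean_y) ** 2 for y in y_true)
    # Residual sum of squares: variation the model leaves unexplained.
    ss_res = sum((y - f) ** 2 for y, f in zip(y_true, y_pred))
    if ss_tot == 0:
        raise ValueError("R squared is undefined when y_true is constant")
    return 1 - ss_res / ss_tot


observed = [3.0, 5.0, 7.0, 9.0]
predicted = [2.8, 5.3, 6.9, 9.1]
print(round(r_squared(observed, predicted), 4))  # → 0.9925
```

Predicting the mean for every observation gives exactly 0, and perfect predictions give exactly 1, which are the two endpoints of the range described above.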

Key Points

- For OLS models with an intercept, R squared values on the training data range from 0 to 1, with higher values indicating a better fit of the model to the data.

- What counts as a good R squared value depends on the context and the field of study; a commonly quoted rule of thumb treats values above 0.7 as good, but it is not a universal standard.

- High R squared values can sometimes be misleading, especially if the model is overfitting the data.

- It's essential to consider other metrics, such as the F-statistic and residual plots, in addition to R squared when evaluating a model's performance.

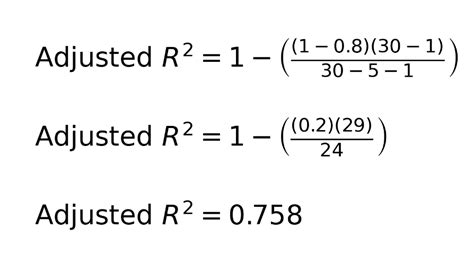

- R squared values can be affected by the number of predictors in the model, with more predictors typically leading to higher R squared values.
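Because adding predictors cannot lower R squared on the training data, analysts often also report adjusted R squared, which penalizes each extra predictor: adjusted R² = 1 - (1 - R²)(n - 1) / (n - p - 1), with n observations and p predictors (intercept excluded). A minimal sketch; the function name `adjusted_r_squared` and the example values are hypothetical.

```python
def adjusted_r_squared(r2, n, p):
    """Adjusted R squared for n observations and p predictors (intercept excluded)."""
    if n - p - 1 <= 0:
        raise ValueError("need more observations than predictors plus one")
    return 1 - (1 - r2) * (n - 1) / (n - p - 1)


# Hypothetical example: two extra predictors raise plain R squared slightly,
# but the penalty makes the adjusted value go down.
print(round(adjusted_r_squared(0.800, n=30, p=3), 4))  # → 0.7769
print(round(adjusted_r_squared(0.805, n=30, p=5), 4))  # → 0.7644
```

The adjusted value only rises when a new predictor improves the fit by more than would be expected from chance alone, which makes it a fairer basis for comparing models of different sizes.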

Interpreting R Squared Values

When interpreting R squared values, it’s crucial to consider the context of the analysis. In some fields, such as physics, R squared values of 0.9 or higher are common due to the strong underlying theoretical foundations. However, in fields like the social sciences or biology, where the relationships between variables are often more complex and nuanced, lower R squared values (e.g., 0.5 or 0.6) might be more typical and still considered satisfactory. The acceptability of an R squared value also depends on the purpose of the model. For predictive models, higher R squared values are generally more desirable, provided they hold up on data the model was not fitted to, since only then do they reflect real predictive power. For exploratory models aimed at understanding relationships, even lower R squared values can provide valuable insights.
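For predictive use, the R squared worth looking at is the one computed on data the model did not see during fitting. The sketch below assumes a one-predictor least-squares line and a hypothetical train/test split; the helper names `fit_line` and `r_squared` are illustrative. Note that on held-out data R squared is not bounded below by 0, because a model can predict worse than simply using the test-set mean.

```python
def fit_line(xs, ys):
    """Ordinary least-squares slope and intercept for one predictor."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


def r_squared(y_true, y_pred):
    """1 - SS_res / SS_tot, using the mean of y_true as the baseline."""
    mean_y = sum(y_true) / len(y_true)
    ss_tot = sum((y - mean_y) ** 2 for y in y_true)
    ss_res = sum((y - f) ** 2 for y, f in zip(y_true, y_pred))
    return 1 - ss_res / ss_tot


# Hypothetical data: fit on the training split, then score on both splits.
x_train, y_train = [1, 2, 3, 4, 5], [2.1, 3.9, 6.2, 7.8, 10.1]
x_test, y_test = [6, 7, 8], [12.3, 13.8, 16.1]

slope, intercept = fit_line(x_train, y_train)
train_r2 = r_squared(y_train, [slope * x + intercept for x in x_train])
test_r2 = r_squared(y_test, [slope * x + intercept for x in x_test])
print(f"train R^2 = {train_r2:.3f}, test R^2 = {test_r2:.3f}")

# Predictions that run against the data push R squared below zero.
print(r_squared([1, 2, 3], [3, 2, 1]))  # → -3.0
```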

Evaluating Model Fit

Evaluating the fit of a regression model involves more than just looking at the R squared value. Other important tools include the F-statistic, which tests the overall significance of the model, and residual plots, which help check whether the assumptions of linear regression (linearity, independence of errors, homoscedasticity, and approximately normal residuals) are met. Multicollinearity does not show up in residual plots and is usually checked separately, for example with variance inflation factors. Additionally, it is essential to consider the practical significance of the coefficients and the model’s ability to generalize to new data (through techniques like cross-validation). A high R squared value does not necessarily mean that the model is good or useful, especially if it is achieved by overfitting, which occurs when a model is so complex that it fits the noise in the training data rather than the underlying pattern.
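For an OLS model with an intercept, the overall F-statistic mentioned above can be computed directly from R squared: F = (R² / p) / ((1 - R²) / (n - p - 1)), with p and n - p - 1 degrees of freedom. A minimal sketch; the function name and the numbers are illustrative, and converting F into a p-value would additionally need an F-distribution survival function (for example SciPy's `scipy.stats.f.sf`), which is left out here.

```python
def overall_f_statistic(r2, n, p):
    """Overall F-statistic for an OLS model with an intercept and p predictors."""
    df_model = p
    df_resid = n - p - 1
    if df_resid <= 0:
        raise ValueError("need more observations than predictors plus one")
    if r2 >= 1:
        raise ValueError("F is unbounded when R squared equals 1")
    return (r2 / df_model) / ((1 - r2) / df_resid)


# The same R squared is weak evidence with few observations
# and much stronger evidence with many.
print(round(overall_f_statistic(0.30, n=20, p=4), 3))   # → 1.607
print(round(overall_f_statistic(0.30, n=200, p=4), 3))  # → 20.893
```

This is one reason a modest R squared is not automatically a failure: with enough data, the model as a whole can still be clearly significant.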

| R Squared Value Range | General Interpretation (rule of thumb) |
|---|---|
| 0.90 and above | Excellent fit; explains most of the variation |
| 0.70 to below 0.90 | Good fit; explains a substantial portion of the variation |
| 0.50 to below 0.70 | Fair fit; explains about half of the variation or somewhat more |
| 0.30 to below 0.50 | Poor fit; explains less than half of the variation |
| Below 0.30 | Very poor fit; explains little of the variation |

These bands are only a rough guide; as discussed above, what counts as acceptable varies by field and by the purpose of the model.

Advanced Considerations

For advanced practitioners, considerations such as the type of regression (linear, logistic, etc.) and the inclusion of interaction terms, polynomial terms, or non-linear transformations can significantly affect the interpretation of R squared. Logistic regression, for instance, has no ordinary R squared; pseudo-R squared measures such as McFadden's are used instead, and they are not on the same scale as the linear-regression value. Moreover, in models with multiple predictors, the contribution of each predictor to R squared can be evaluated using partial F-tests or by examining the change in R squared when a predictor is added to or removed from the model. This nuanced understanding can help in refining the model to better capture the underlying relationships between variables.
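The change-in-R-squared comparison mentioned above can be turned into a partial F-test for nested OLS models: F = ((R²_full - R²_reduced) / q) / ((1 - R²_full) / (n - k_full - 1)), where q is the number of predictors added and k_full is the number of predictors in the full model. A minimal sketch with hypothetical values; it assumes both models are fitted by OLS with an intercept on the same n observations, and the function name is illustrative.

```python
def partial_f_test(r2_reduced, r2_full, n, k_full, q):
    """F-statistic for adding q predictors to a nested OLS model."""
    if not 0 < q <= k_full:
        raise ValueError("q must be between 1 and k_full")
    df_resid = n - k_full - 1
    if df_resid <= 0:
        raise ValueError("need more observations than predictors plus one")
    # Gain in explained variance per added predictor, relative to the
    # unexplained variance per residual degree of freedom in the full model.
    return ((r2_full - r2_reduced) / q) / ((1 - r2_full) / df_resid)


# Hypothetical: adding 2 predictors raises R squared from 0.50 to 0.56 with n = 60.
print(round(partial_f_test(0.50, 0.56, n=60, k_full=5, q=2), 3))  # → 3.682
```

The statistic is then compared against an F distribution with q and n - k_full - 1 degrees of freedom to judge whether the added predictors explain more than chance would.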

Common Misconceptions

One common misconception about R squared is that it is the same as the correlation between two variables. While related, correlation (measured by the Pearson correlation coefficient, for example) and R squared are distinct concepts. Correlation measures the strength and direction of a linear relationship between two variables, whereas R squared measures the proportion of variance in one variable that is predictable from one or more other variables. The two are linked in one special case: for a simple linear regression with an intercept, R squared equals the square of the Pearson correlation, which discards its sign and therefore the direction of the relationship. Another misconception is that higher R squared values always indicate better models. This overlooks the potential for overfitting, where models with many parameters may fit the training data very well (resulting in high R squared) but fail to generalize to new, unseen data.
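For a simple linear regression with one predictor and an intercept, fitted by least squares, R squared equals the square of the Pearson correlation between x and y. The sketch below checks this numerically on made-up data; the helper names are illustrative. With several predictors, R squared instead equals the squared correlation between the observed and fitted values.

```python
import math


def pearson_r(xs, ys):
    """Pearson correlation coefficient between two equal-length sequences."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sxx = sum((x - mx) ** 2 for x in xs)
    syy = sum((y - my) ** 2 for y in ys)
    return sxy / math.sqrt(sxx * syy)


def simple_regression_r2(xs, ys):
    """Fit y = a + b*x by least squares and return R squared."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sxx = sum((x - mx) ** 2 for x in xs)
    b = sxy / sxx
    a = my - b * mx
    ss_res = sum((y - (a + b * x)) ** 2 for x, y in zip(xs, ys))
    ss_tot = sum((y - my) ** 2 for y in ys)
    return 1 - ss_res / ss_tot


xs = [1.0, 2.0, 3.0, 4.0, 5.0, 6.0]
ys = [2.0, 1.5, 4.0, 3.5, 6.5, 5.0]
r = pearson_r(xs, ys)
print(f"r = {r:.4f}, r^2 = {r * r:.4f}, R^2 = {simple_regression_r2(xs, ys):.4f}")
```

Reversing `ys` flips the sign of r but leaves R squared unchanged, which is exactly the information about direction that R squared does not carry.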

What does a high R squared value indicate?

A high R squared value indicates that the model explains a large proportion of the variance in the dependent variable. However, it does not necessarily mean the model is good or useful, as it could be overfitting the data.

How do I choose a good R squared threshold?

The choice of a good R squared threshold depends on the context of the analysis, including the field of study, the purpose of the model, and the complexity of the relationships being modeled. Values above 0.7 are often quoted as good, but this is only a rule of thumb and varies widely between fields.

Can R squared be used for model selection?

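R squared can be one factor in model selection, but it should not be the only criterion. Because plain R squared never decreases when predictors are added, comparisons between models of different sizes should rely on measures that account for complexity, such as adjusted R squared, information criteria like AIC or BIC, or cross-validated prediction error, alongside the F-statistic, residual plots, and interpretability.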

In conclusion, understanding and interpreting R squared values is a nuanced task that requires consideration of the model’s context, the data’s characteristics, and the purpose of the analysis. While high R squared values can indicate a good fit, they do not guarantee a useful or meaningful model. By combining R squared with other evaluation metrics and considering the broader context of the research or application, practitioners can develop and refine models that provide valuable insights and predictions.